🍃Generate Tabular Synthetic Data with TGANs

About GANs

Generative Adversarial Networks, commonly known as "GANs", are gaining popularity worldwide. They have been applied across a wide range of fields, including music generation, style transfer in paintings, the creation of realistic gaming environments, virtual try-ons in the fashion industry, deepfakes, poem writing, and more.

While GANs have found extensive use in manipulating image and text data, a question arises: can they be effectively applied to tabular data? In this stop, we will explore the generation of synthetic tabular data through TGANs (Tabular GANs). Before delving into this, let's gain a brief understanding of how GANs work.

Generator vs Discriminator

All GANs contain 2 key components: a generator and a discriminator.

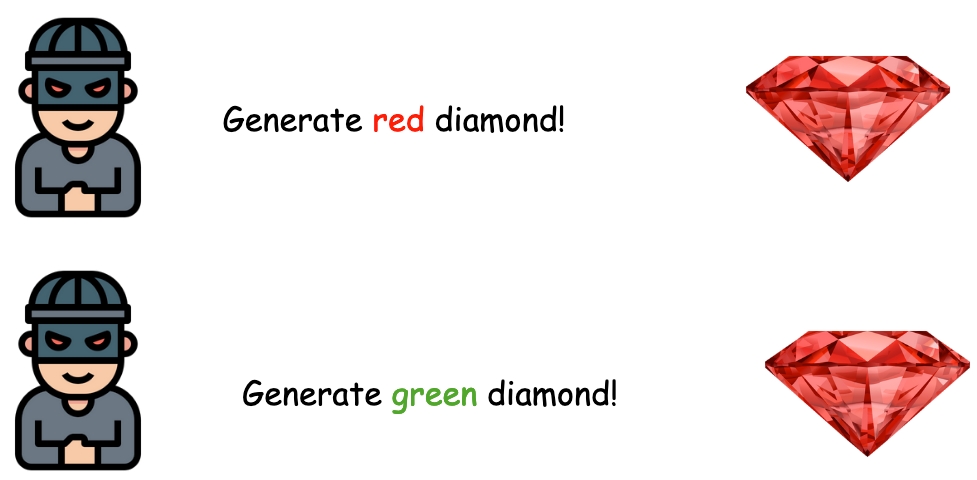

Their roles are like those of a criminal and a police officer. The criminal (generator) creates fake diamonds, and the police officer (discriminator) needs to distinguish between the real diamonds and the fake ones. Both the criminal and the police officer enhance their skills through iterative training until, finally, the police officer can hardly differentiate between the real diamonds and the counterfeits.

DCGAN

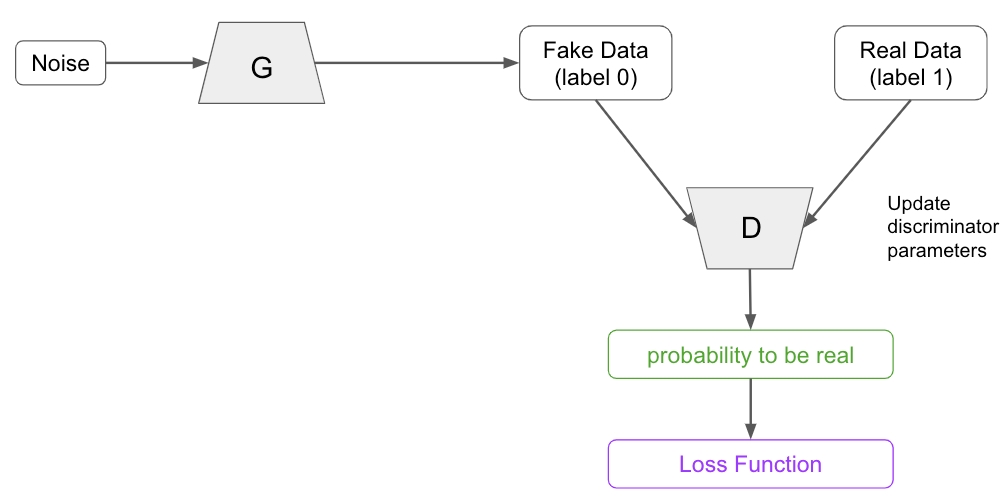

To understand GANs, we can start with DCGAN (Deep Convolutional GAN), as it's like the vanilla version of GANs. DCGAN executes 2 training steps iteratively:

Step 1: train the discriminator.

In this step, the generator takes noise data as input and outputs a set of fake data labeled as "0". Meanwhile, there is a set of real data labeled as "1". Both the real data and the fake data are fed into the discriminator. The discriminator outputs predicted probabilities (the probability of belonging to the real class), which are sent to the loss function for evaluation, and it then updates its parameters based on that loss.

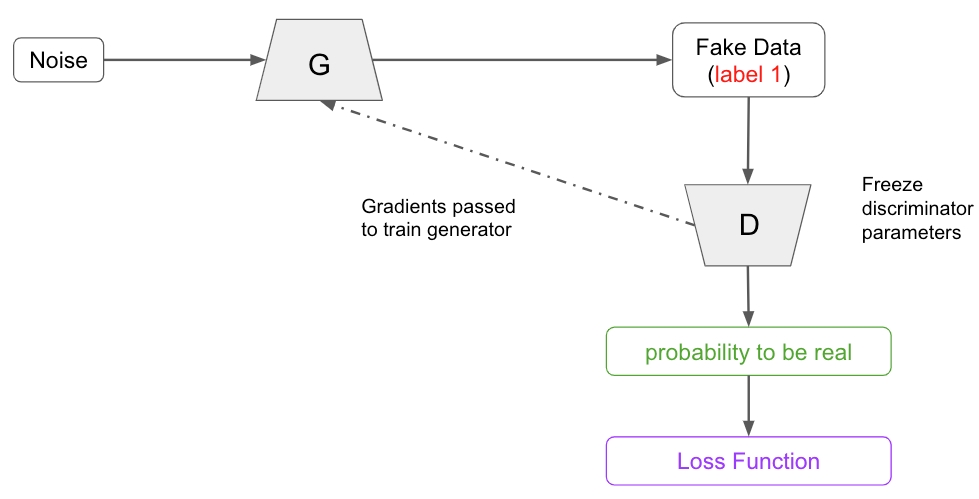

Step 2: adversarial training, train the generator.

Unlike step 1, where gradients are used to update the discriminator's parameters, here the discriminator's parameters remain frozen. The generator produces a new set of fake data, labels it as "1" and sends it to the discriminator for a real vs. fake classification. The gradients of the resulting loss flow back through the frozen discriminator and update only the generator, pushing it to produce data that the discriminator classifies as real.

These 2 steps are executed iteratively until the discriminator can hardly distinguish the real data from the synthetic data.
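The two alternating steps can be sketched with a deliberately tiny example. This is not DCGAN itself: it assumes a one-dimensional linear generator and a logistic discriminator (both hypothetical stand-ins for real networks) so that the gradients can be written by hand, and it only illustrates which labels are used and which parameters are updated in each step.

```python
import math
import random

random.seed(0)

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

# Toy 1-D models: generator G(z) = a*z + c, discriminator D(x) = sigmoid(w*x + b).
gen = {"a": 1.0, "c": 0.0}
disc = {"w": 0.1, "b": 0.0}

def generate(z):
    return gen["a"] * z + gen["c"]

def discriminate(x):
    return sigmoid(disc["w"] * x + disc["b"])

def train_discriminator(real_batch, lr=0.05):
    """Step 1: real rows labeled 1, fake rows labeled 0; only `disc` changes."""
    fake_batch = [generate(random.gauss(0, 1)) for _ in real_batch]
    labeled = [(x, 1.0) for x in real_batch] + [(x, 0.0) for x in fake_batch]
    # For binary cross-entropy, the gradient w.r.t. the logit is (p - y).
    grad_w = sum((discriminate(x) - y) * x for x, y in labeled) / len(labeled)
    grad_b = sum(discriminate(x) - y for x, y in labeled) / len(labeled)
    disc["w"] -= lr * grad_w
    disc["b"] -= lr * grad_b

def train_generator(batch_size, lr=0.05):
    """Step 2: fake rows labeled 1; `disc` is frozen, only `gen` changes."""
    grad_a = grad_c = 0.0
    for _ in range(batch_size):
        z = random.gauss(0, 1)
        p = discriminate(generate(z))
        # Chain rule through the frozen discriminator: dL/dx = (p - 1) * w.
        grad_x = (p - 1.0) * disc["w"]
        grad_a += grad_x * z / batch_size
        grad_c += grad_x / batch_size
    gen["a"] -= lr * grad_a
    gen["c"] -= lr * grad_c

real_data = [random.gauss(4.0, 1.0) for _ in range(128)]
for _ in range(200):
    train_discriminator(real_data)
    train_generator(batch_size=128)
```

In a real GAN both models are deep networks and a framework computes the gradients, but the division of labor between the two steps stays the same.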

After DCGAN, numerous improvements have been made to enhance GANs' performance. For example:

CGAN (Conditional GAN) allows you to decide the output class.

Training GANs is notoriously challenging due to the delicate balance they strive to maintain between 2 competing components, the generator and the discriminator. Throughout this process, stability issues such as gradient vanishing and mode collapse frequently arise.

Gradient Vanishing occurs when the discriminator becomes so confident in its classification that it stops updating its parameters. The gradients shrink significantly as they propagate back to the generator's layers, so the generator fails to converge.

Mode Collapse occurs when the generator consistently produces identical or a limited variety of outputs, failing to sufficiently capture the full diversity of the target data.

WGAN (Wasserstein GAN) and LSGAN (Least Squares GAN) were designed to address such stability challenges by employing new loss functions, in contrast to DCGAN.

LSGAN also helps improve the perceptual quality of output images, and so does ACGAN (Auxiliary Classifier GAN).

What's noteworthy is the architecture of ACGAN: it adds a classifier to the discriminator. Rather than solely predicting the probability of being real, the discriminator is also tasked with classifying the output. The underlying assumption is that by introducing this additional classification task, the quality of the output can be enhanced.

Disentangled Representation GANs like InfoGAN and StackedGAN allow you to change the output properties, such as shape, rotation, thickness, etc.

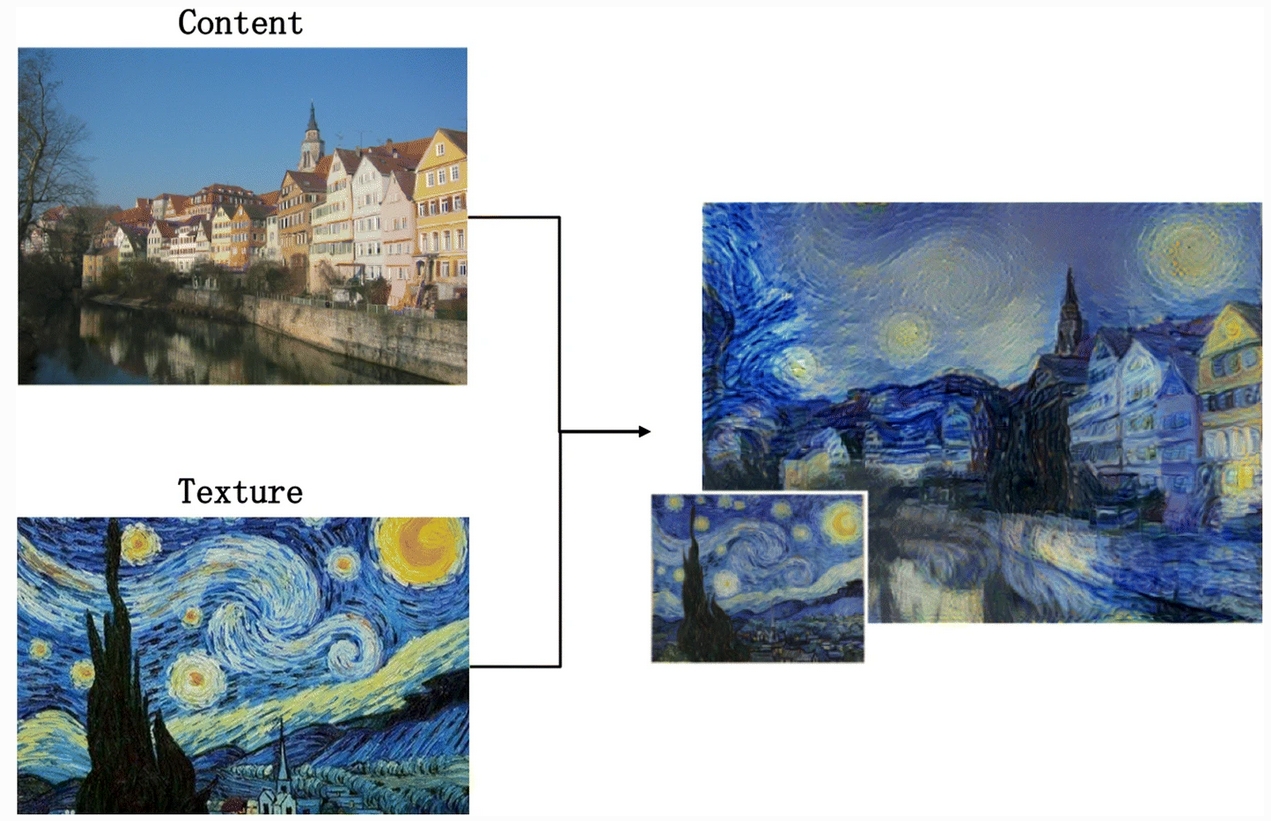

Cross-Domain GANs like CycleGAN and CyCADA (Cycle-Consistent Adversarial Domain Adaptation) enable style transfer of the input. Have you ever played with the popular game shown below? By providing a photo, you can change it to a selected painting style:

Why Synthetic Tabular Data?

There are several benefits of using synthetic tabular data across different industries. For example:

Enabling data privacy and ethical AI

Health researchers use synthetic tabular data to protect real patients' data while conducting their research.

Banks build machine learning models on synthetic tabular data to protect customer data privacy.

Data simulation is often used when existing real data has limited scenarios

Banks simulate synthetic data to create more real-world scenarios, such as customer behavior data to improve personalized banking services and customer interactions to enhance relationships.

Supply chain systems simulate synthetic data to mimic the supply chain process, optimizing logistics, production schedules, and inventory management.

Have you ever used synthetic tabular data? You're welcome to share your experience or ideas here!

Tabular GANs

Numerous GANs (Generative Adversarial Networks) have been applied to image and text data. However, given the significant role that tabular data plays in data science, can GANs be employed to generate synthetic tabular data? Indeed, they can, and these GANs are called TGANs (Tabular GANs).

CTGAN

CTGAN (Conditional Tabular GAN) was published in 2019, and later multiple promising Tabular GANs were built upon it, so we can consider it as the vanilla version of Tabular GANs!

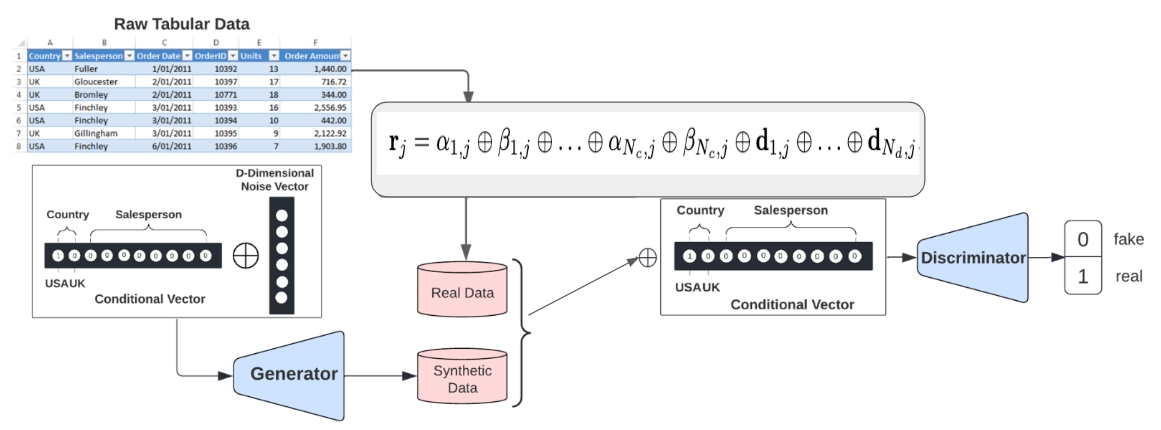

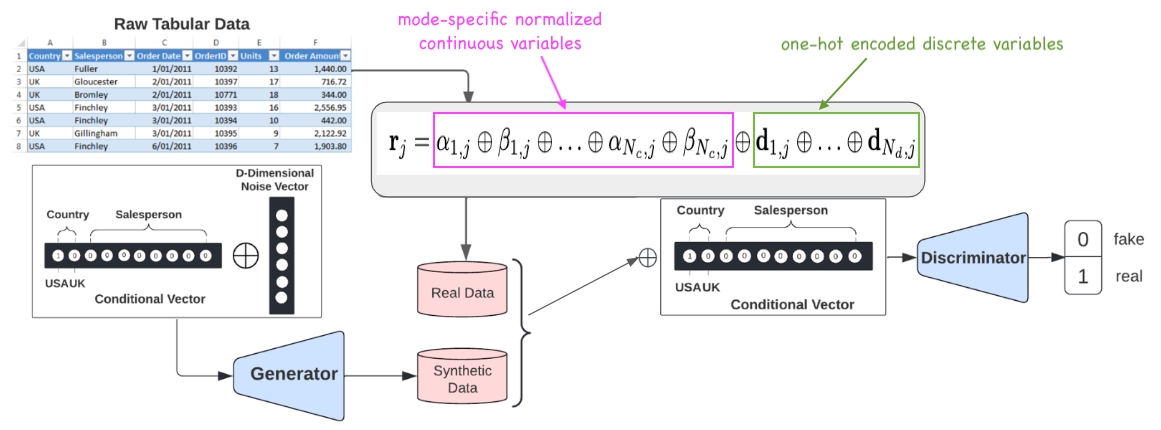

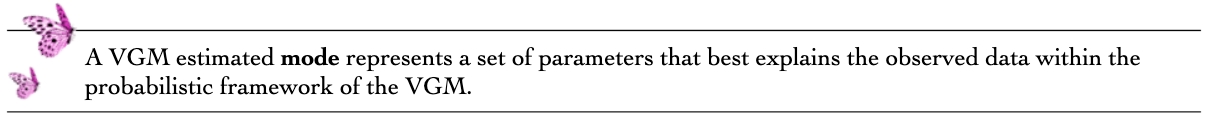

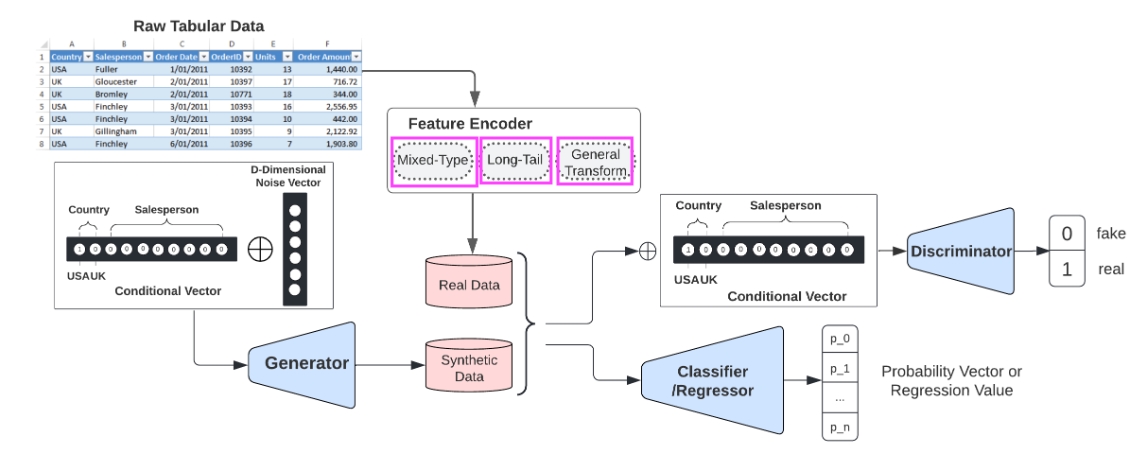

The architecture of CTGAN can be summarized as below:

Compared with the architecture of DCGAN (the vanilla version of GANs), it has 2 major changes: Mode-specific Normalization and Train-by-Sample & Conditional Vector.

Tabular data is typically a combination of discrete variables and continuous variables. Discrete variables can be represented in one-hot format and processed by GANs directly. However, the challenge lies in effectively processing the more intricate distributions inherent in continuous variables. Therefore, CTGAN proposes Mode-specific Normalization to address this challenge.

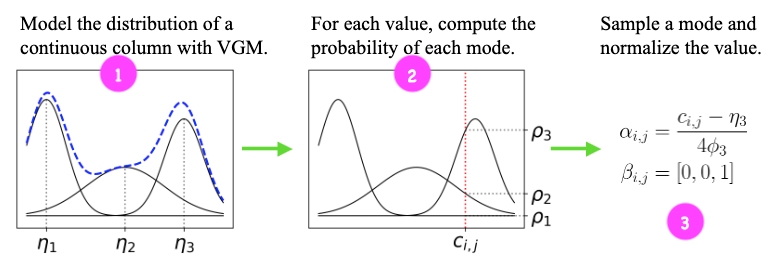

Let's delve deeper into Mode-specific Normalization by breaking it down into 3 steps:

Step 1: Apply a VGM (variational Gaussian mixture model) to estimate the number of modes in a continuous variable's distribution and fit a Gaussian mixture. The learned Gaussian mixture forms a normal distribution for each mode. In the example above, the continuous variable's distribution, represented by a blue dashed curve, has 3 estimated modes, so 3 normal distributions are created.

Step 2: Each value of this continuous variable can be represented as $C_{i,j}$ (ith column, jth row in the tabular data). Plot it on the Gaussian mixture to get the probability of each mode, then choose the mode with the highest probability. In the example above, $\rho_3$ is the highest probability, so the 3rd mode is selected.

Step 3: Use the selected mode's mean $\eta$ and standard deviation $\phi$ to calculate a normalized value $\alpha_{i,j} = (C_{i,j} - \eta) / (4\phi)$, representing the original value within the mode. Meanwhile, use a binary vector $\beta_{i,j}$ to record which mode was selected. In the example above, the 3rd mode was selected, so $\beta_{i,j} = [0, 0, 1]$.

Now the original value $C_{i,j}$ is encoded as a vector $\alpha_{i,j} \oplus \beta_{i,j}$, where $\oplus$ is the vector concatenation operator.
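The encoding of a single value can be sketched as follows. This is a minimal illustration that assumes the Gaussian mixture has already been fitted; the three `(weight, mean, std)` tuples are made-up numbers rather than the result of a real VGM fit, and the mode is picked by highest probability, as in the description above.

```python
import math

def normal_pdf(x, mean, std):
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))

def encode_value(c, modes):
    """Encode one continuous value as [alpha] + beta.

    `modes` is a list of (weight, mean, std) tuples describing a fitted mixture.
    """
    # Probability of each mode for this value (weighted density, normalized).
    densities = [w * normal_pdf(c, mean, std) for w, mean, std in modes]
    total = sum(densities)
    probs = [d / total for d in densities]

    # Select the most probable mode.
    k = max(range(len(modes)), key=probs.__getitem__)
    _, eta, phi = modes[k]

    alpha = (c - eta) / (4 * phi)                            # value within the mode
    beta = [1 if i == k else 0 for i in range(len(modes))]   # mode indicator
    return [alpha] + beta                                    # alpha concatenated with beta

# Hypothetical fitted mixture with 3 modes.
modes = [(0.3, 10.0, 2.0), (0.3, 30.0, 3.0), (0.4, 60.0, 5.0)]
encoded = encode_value(65.0, modes)
print(encoded)  # [0.25, 0, 0, 1]
```

Here the value 65 falls in the 3rd mode (mean 60, standard deviation 5), so the scalar part is (65 - 60) / (4 × 5) = 0.25 and the indicator is [0, 0, 1].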

Traditionally, the generator may not be trained well if the discrete variables are imbalanced, because the minority categories are not sampled evenly. Train-by-Sample & Conditional Vector tries to evenly (but not necessarily uniformly) explore all categories in each discrete variable during training and recover the real data distribution during testing. "Conditional vector" means that, given a particular categorical value, rows with that value in the real data have to appear in the data sample. A generator trained on such conditional distributions is called a "conditional generator", which is also why CTGAN is named "conditional".
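One way to picture this sampling is the sketch below. It assumes categories are drawn in proportion to the logarithm of their frequency, which is one way to explore them evenly without being uniform; the `+ 1` inside the logarithm, the helper name and the toy rows are all illustrative choices, not taken from the CTGAN code.

```python
import math
import random
from collections import Counter

def sample_condition(rows, discrete_columns, rng):
    """Pick a discrete column, then a category, then a matching real row."""
    column = rng.choice(discrete_columns)
    counts = Counter(row[column] for row in rows)
    categories = sorted(counts)
    # Log-frequency weights: rare categories are drawn more often than
    # their raw share of the data would allow.
    weights = [math.log(counts[c] + 1) for c in categories]
    category = rng.choices(categories, weights=weights, k=1)[0]

    # Conditional vector: one-hot over every (column, category) pair.
    slots = [(col, cat) for col in discrete_columns
             for cat in sorted({row[col] for row in rows})]
    cond_vector = [1 if slot == (column, category) else 0 for slot in slots]

    # The real row shown to the discriminator must satisfy the condition.
    real_row = rng.choice([row for row in rows if row[column] == category])
    return column, category, cond_vector, real_row

rows = ([{"job": "admin", "housing": "yes"}] * 90
        + [{"job": "student", "housing": "no"}] * 10)
rng = random.Random(42)
column, category, cond_vector, real_row = sample_condition(rows, ["job", "housing"], rng)
```

With 90 "admin" rows and 10 "student" rows, raw sampling would pick "student" 10% of the time, while the log weights above raise that to roughly a third.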

CTABGAN+

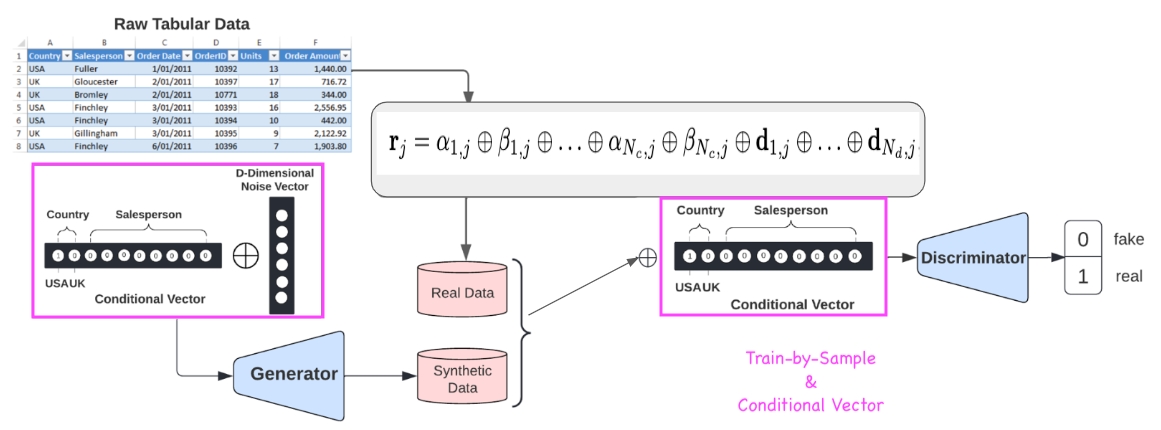

CTABGAN+ was published in 2022. Its architecture looks quite like CTGAN's, right? Compared with CTGAN, it makes 2 major changes. Let's look into the details!

First of all, CTABGAN+ adds an extra estimator to predict each variable of the tabular data. For a discrete variable, it predicts the probability of each category; for a continuous variable, it predicts the regression value. The underlying assumption is the same as for the ACGAN mentioned before: by introducing this auxiliary estimator, the quality of the output can be enhanced.

The second major change is in feature encoding:

Mixed-Type is the same as CTGAN's Mode-specific Normalization, aiming at representing both discrete and continuous variables well.

Long-Tail applies a log transform to better handle continuous variables with long tails. The VGM used in Mode-specific Normalization has difficulty encoding values towards the tail; the log transform compresses the distance between the tail and the bulk of the data, allowing the VGM to encode all the data more easily.

General Transform (GT) encodes a variable into the (-1, 1) range so that the encoding is directly compatible with the output of the `tanh` activation function used by the generator. GT is selectively applied to single-mode continuous variables because VGM can't effectively handle such variables. At the same time, because GT doesn't provide a mode indicator, applying VGM to multi-mode continuous variables is more effective than using GT. GT is also applied to high-cardinality discrete variables, because such a variable has too many unique values and can cause dimensionality explosion after one-hot encoding. However, GT is not recommended for other discrete variables because, compared with one-hot vectors, its integer output can impose artificial distances between categories that do not reflect reality.
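The Long-Tail and General Transform encodings can be sketched in a few lines. This is an illustration rather than CTABGAN+'s own code: the shift applied to non-positive values, the `eps` default and the min-max form of GT are assumptions, and the sample values are invented.

```python
import math

def long_tail_transform(values, eps=1.0):
    """Log transform; shifts by the minimum when non-positive values occur."""
    lower = min(values)
    if lower > 0:
        return [math.log(v) for v in values]
    return [math.log(v - lower + eps) for v in values]

def general_transform(values):
    """Min-max scale to [-1, 1]; assumes the values are not all identical."""
    lo, hi = min(values), max(values)
    return [2 * (v - lo) / (hi - lo) - 1 for v in values]

def inverse_general_transform(encoded, lo, hi):
    """Map generator outputs in [-1, 1] back to the original range."""
    return [(e + 1) / 2 * (hi - lo) + lo for e in encoded]

# A long-tailed variable: the log transform pulls the outlier towards the bulk.
balances = [1, 10, 100, 10000]
log_balances = long_tail_transform(balances)

# A single-mode variable encoded for a tanh output layer.
days = [0, 5, 10]
encoded_days = general_transform(days)
print(encoded_days)                                    # [-1.0, 0.0, 1.0]
print(inverse_general_transform(encoded_days, 0, 10))  # [0.0, 5.0, 10.0]
```

Note that the minimum and maximum land exactly on -1 and 1, the limits that `tanh` approaches but never reaches.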

CasTGAN

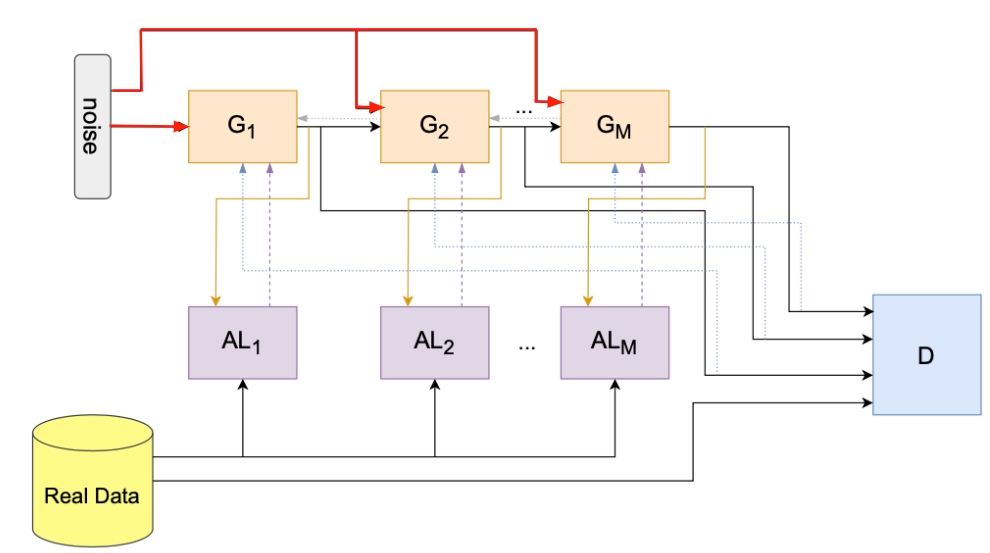

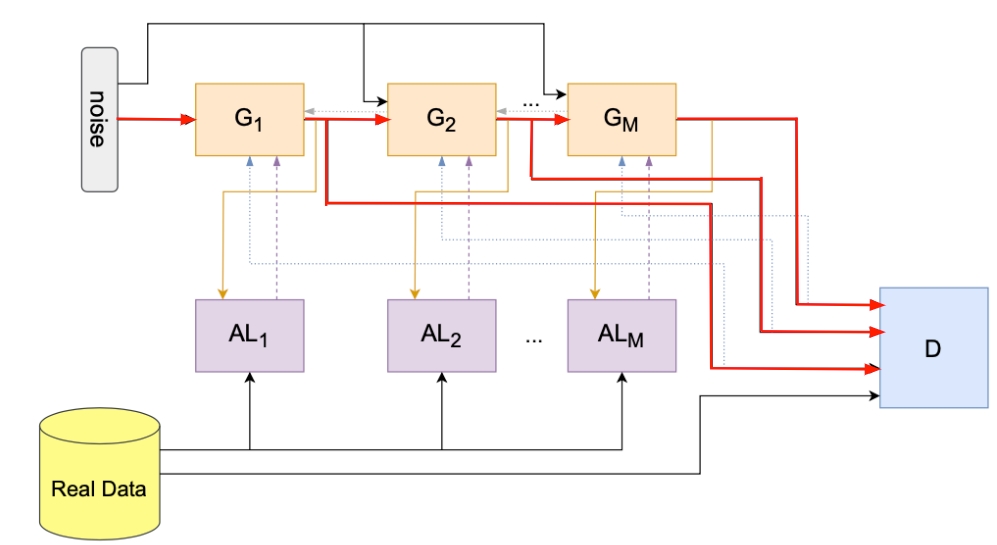

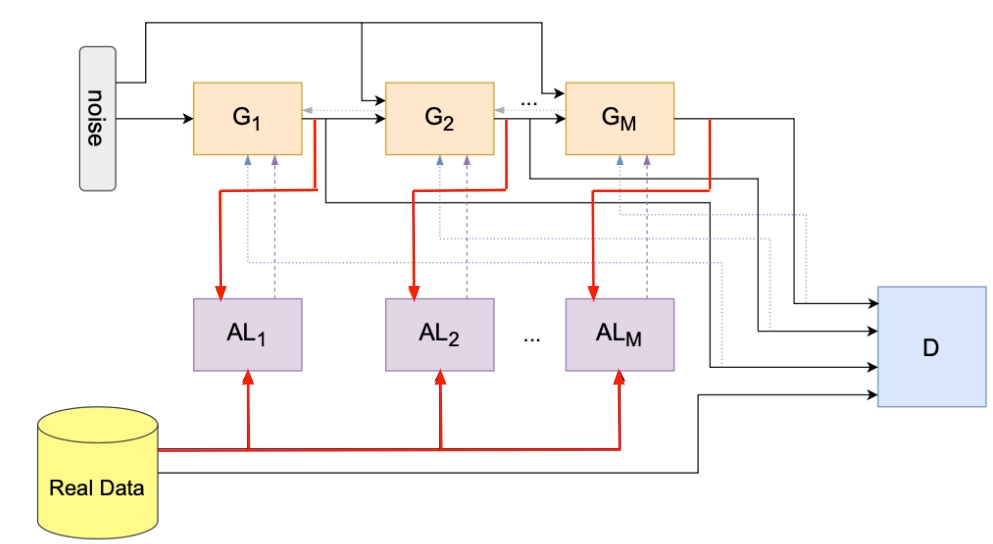

CasTGAN (Cascade Tabular GAN) was published in 2023. It introduced a cascaded architecture:

The architecture looks complex, but it's still a combination of generator, discriminator and auxiliary estimator. Let's break it down!

Firstly, you can see that CasTGAN has several generators (marked as orange squares), and they all get the same noise input.

The generators are arranged in a sequence: each generator produces one of the variables in the tabular data and passes the generated variable to the next generator. Consequently, the last generator sends all the generated variables to the discriminator (marked as a blue square).

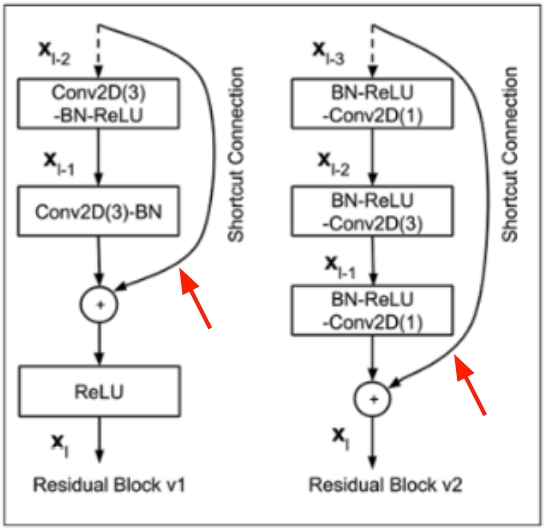

Meanwhile, you must have noticed that each generator connects to the discriminator. If the last generator sends all the output variables to the discriminator, why does CasTGAN have each generator communicate with the discriminator? Lady H. checked throughout the paper and didn't find the reason, but in her opinion, this creates shortcut connections to relieve gradient vanishing during backpropagation through the hierarchical communication.

Such shortcut connections have been used in other neural networks too. The example below comes from 2 versions of ResNet; both versions allow feature map information to skip multiple layers and reach the shallow layers directly.

What do you think? Feel free to share your ideas here!

CasTGAN also has multiple auxiliary estimators (marked as purple squares). Each generator has a corresponding auxiliary estimator that predicts the same variable that the generator attempts to generate. Each auxiliary estimator is a pretrained LightGBM model, trained on the rest of the variables (from the real data) to predict the target variable. The benefit of using LightGBM here is that it can handle categorical variables without one-hot encoding.

According to CasTGAN's paper, its cascaded architecture is able to capture the correlations and interdependence between variables, thereby making the generated synthetic data more realistic.

This process becomes clearer when we examine each individual auxiliary estimator. As depicted in the left graph, each auxiliary estimator (LightGBM) is trained using the remaining variables of the real data. It then uses the same variables from the synthetic data to predict the target variable (represented in purple as mi). Simultaneously, the corresponding generator generates the synthetic target variable (represented in orange as mi). Both the purple and orange mi values are sent to the loss function to calculate the generator's loss and the auxiliary loss, and these two loss values are compared.

The auxiliary loss must not exceed the generator's loss, requiring that the synthetic data generated by earlier generators meets a certain quality level. This quality control is managed by CasTGAN's parameters. Through this control, the generated variables should conform to the distributions of the real data, thereby preserving the correlations and interdependencies between variables.

Next, you will see Lady H.'s experiments using these 3 Tabular GANs. Guess which TGAN got better results? 😉

Generate Tabular Synthetic Data

About the Data

If you have already visited Resplendent Tree, you must have heard of our Garden Bank.

Our Garden Bank is renowned for its robust security, which has attracted substantial investments and deposits from clients cosmos-wide. To protect our customers' sensitive data, we employ an innovative strategy that confounds hackers by blending real and decoy data locations. For every genuine piece of data, we create multiple look-alike decoys stored in different locations, making it exceedingly difficult for hackers to identify the true data sources.

One of the key technologies driving this strategy is synthetic data generation, which allows us to seamlessly create these decoys and enhance our security measures!

The deposit campaign data has:

11,162 records

7 numerical variables

9 categorical variables

The target is a binary value, indicating whether there is a deposit or not

The target has 52.6% negative records and 47.4% positive records

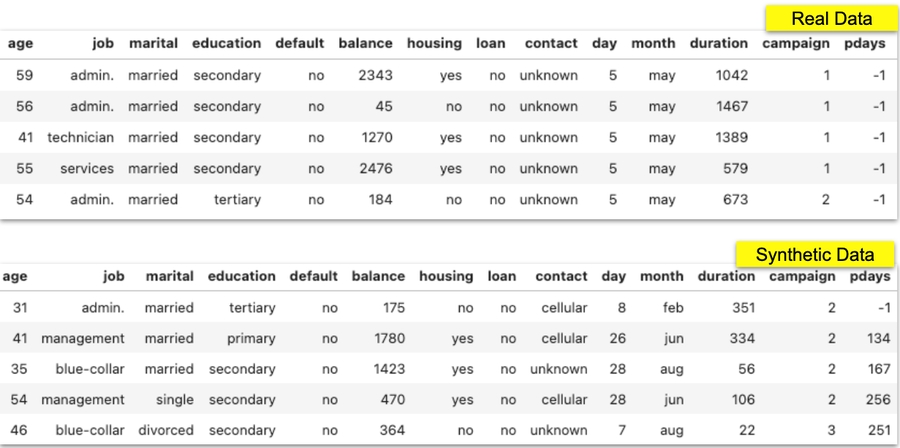

Let's take a look at a snapshot of the real data and the generated synthetic data:

Evaluation Metrics

Lady H. used two methods to assess the effectiveness of the generated synthetic data.

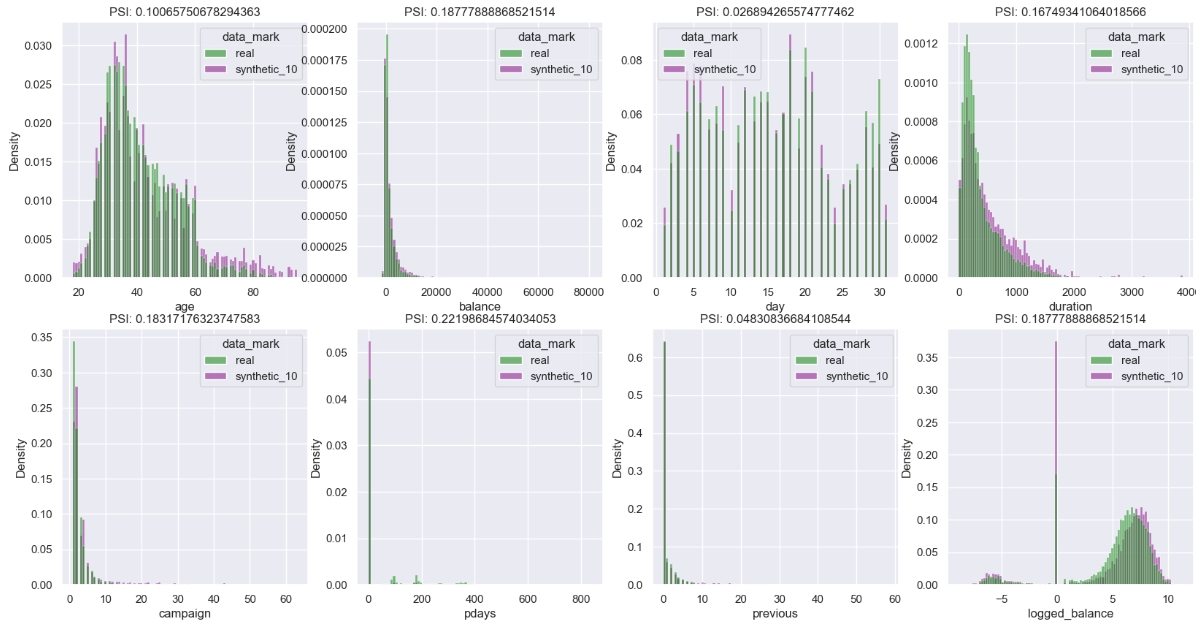

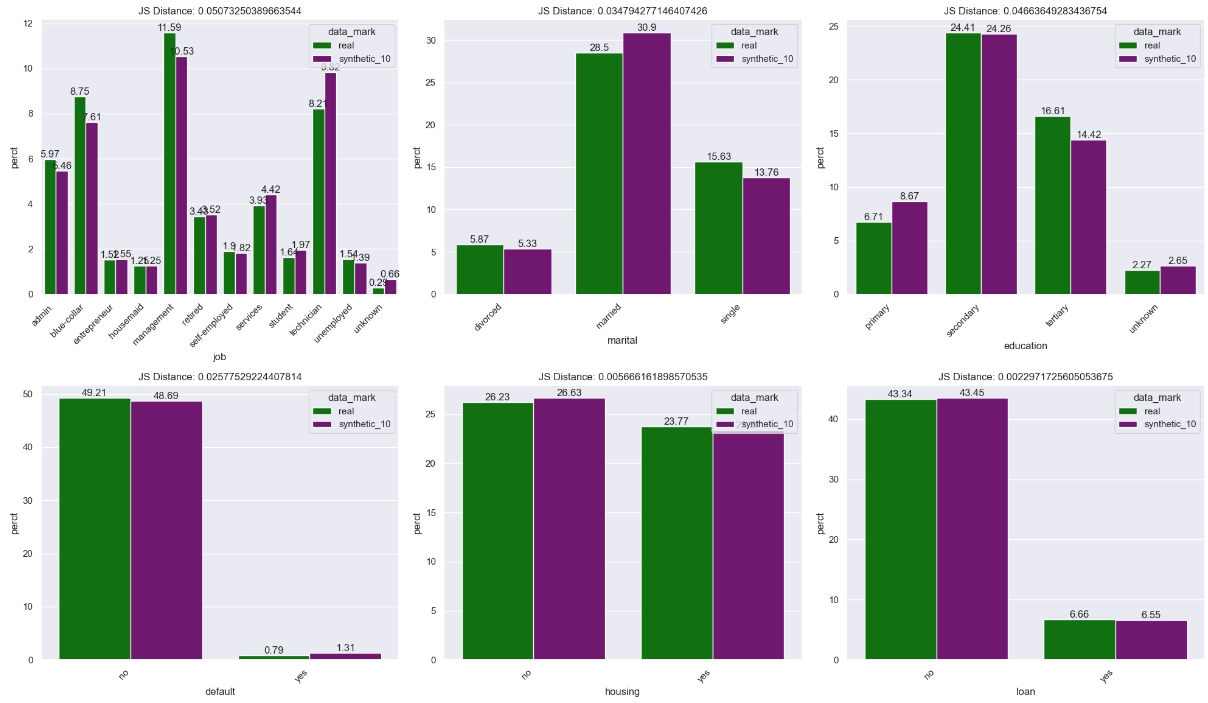

The first method involved comparing the distributions of variables between the real data and the synthetic data. For continuous variables, the PSI (Population Stability Index) score was used, while JS (Jensen-Shannon) Distance was employed for comparing the distributions of discrete variables.

PSI often employs these thresholds:

PSI < 0.1: no significant distribution change

0.1 <= PSI < 0.2: moderate distribution change

PSI >= 0.2: significant distribution change

Therefore, if more variables have a PSI score below 0.2, or even below 0.1, it indicates that the generated synthetic data closely resembles the real data.
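For reference, PSI can be computed in a few lines. The sketch below assumes 10 bins whose edges are quantiles of the real data, plus a small `eps` to keep empty bins from breaking the logarithm; both are common choices and not necessarily the ones Lady H. used.

```python
import math

def psi(expected, actual, n_bins=10, eps=1e-6):
    """Population Stability Index of `actual` (synthetic) against `expected` (real)."""
    ordered = sorted(expected)
    # Interior bin edges at the quantiles of the expected sample.
    edges = [ordered[int(len(ordered) * k / n_bins)] for k in range(1, n_bins)]

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            counts[sum(v >= e for e in edges)] += 1
        # eps keeps empty bins from producing log(0) or a division by zero.
        return [max(c / len(values), eps) for c in counts]

    e_shares, a_shares = bin_shares(expected), bin_shares(actual)
    return sum((a - e) * math.log(a / e) for a, e in zip(a_shares, e_shares))

real = [i / 10 for i in range(1000)]
same = list(real)
shifted = [x + 30 for x in real]

print(psi(real, same))            # 0.0, identical distributions
print(psi(real, shifted) >= 0.2)  # True, a significant change
```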

JS Distance can be used to compare the distributions of discrete variables, yielding a score between 0 and 1, where 0 indicates identical distributions and 1 indicates completely different ones. Lady H. set a threshold of 0.1: if more variables have a JS Distance below 0.1, it suggests that the generated synthetic data closely matches the real data.
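A minimal sketch of JS Distance for one discrete variable follows. It uses base-2 logarithms, which is what bounds the score between 0 and 1; the function name and the sample categories are illustrative.

```python
import math
from collections import Counter

def js_distance(real_values, synthetic_values):
    """Jensen-Shannon distance between two samples of a discrete variable."""
    categories = sorted(set(real_values) | set(synthetic_values))
    real_counts, synth_counts = Counter(real_values), Counter(synthetic_values)
    p = [real_counts[c] / len(real_values) for c in categories]
    q = [synth_counts[c] / len(synthetic_values) for c in categories]
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]

    def kl(a, b):
        # Terms with a zero probability contribute nothing.
        return sum(ai * math.log2(ai / bi) for ai, bi in zip(a, b) if ai > 0)

    divergence = 0.5 * kl(p, m) + 0.5 * kl(q, m)
    # The distance is the square root of the divergence.
    return math.sqrt(max(divergence, 0.0))

print(js_distance(["yes", "no"], ["no", "yes"]))  # 0.0
print(js_distance(["yes"], ["no"]))               # 1.0
```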

The second evaluation method was to train a machine learning model on two training datasets, one from the real data and the other from the synthetic data, then compare their performance on the same testing data drawn from the real data. A smaller performance difference indicates a higher similarity between the real and the synthetic data.

Lady H. used the second evaluation method in most experiments after she found it to be more effective in making the comparison.
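The protocol itself is independent of the model, which the sketch below tries to show. The one-feature threshold classifier and the accuracy metric are toy stand-ins chosen so the example runs without extra packages; in the experiments described later, the model would be something like LightGBM and the metrics AUC and AVP.

```python
def utility_gap(train_real, train_synth, test_real, fit, score):
    """Train on each dataset, then score both models on the same real test set."""
    real_score = score(fit(train_real), test_real)
    synth_score = score(fit(train_synth), test_real)
    return real_score, synth_score, real_score - synth_score

# Toy stand-ins (assumptions for illustration only).
def fit_threshold(rows):
    """'Model' = midpoint between the mean feature value of each class."""
    pos = [x for x, y in rows if y == 1]
    neg = [x for x, y in rows if y == 0]
    return (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2

def accuracy(threshold, rows):
    return sum((x > threshold) == (y == 1) for x, y in rows) / len(rows)

# Each row is (feature, label).
train_real = [(1, 0), (2, 0), (8, 1), (9, 1)]
train_synth = [(1, 0), (9, 0), (8, 1), (10, 1)]
test_real = [(3, 0), (4, 0), (7, 1), (10, 1)]

real_score, synth_score, gap = utility_gap(
    train_real, train_synth, test_real, fit_threshold, accuracy
)
print(real_score, synth_score, gap)  # 1.0 0.75 0.25
```

The smaller the gap, the closer the synthetic training data is to the real training data for this task.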

Setup Environment

Follow this guidance to create a virtual environment; it simplifies Python package installation and version control.

The required python version is Python 3.9.

Follow this guidance to set up required python packages.

If you run into any package version problem, you can check the full list of Python packages here.

Install & Execute TGANs

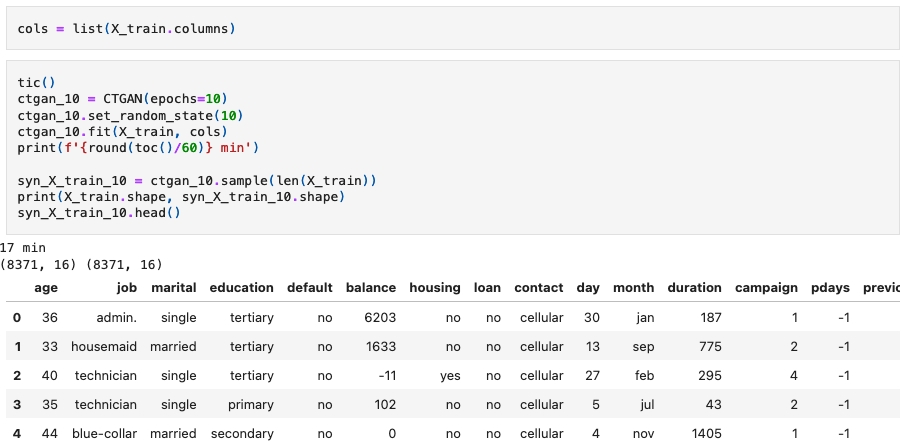

Install & Execute CTGAN

CTGAN was included in `requirements.txt` and was installed during the environment setup step.

Generating the synthetic data only takes a few lines of code, and you can adjust these parameters. See example code below:

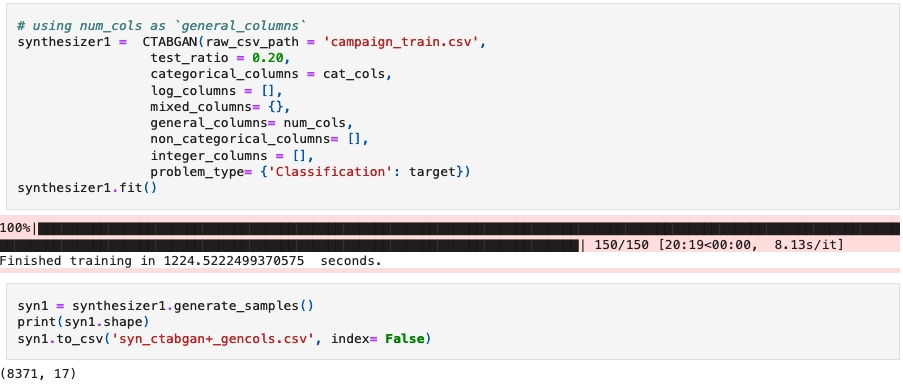
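As a rough text version of such a call, here is a sketch using the `ctgan` package's `CTGAN` class with its `fit` and `sample` methods. The file names, the `epochs` value and the rule for picking discrete columns are assumptions made for illustration, not Lady H.'s actual settings.

```python
def generate_with_ctgan(csv_path, n_rows, epochs=10):
    """Fit CTGAN on a CSV file and return `n_rows` synthetic rows as a DataFrame."""
    # Imported lazily so the function can be defined without the packages installed.
    import pandas as pd
    from ctgan import CTGAN

    real = pd.read_csv(csv_path)
    # Assumption: every non-numeric column is a discrete variable.
    discrete_columns = real.select_dtypes(exclude="number").columns.tolist()

    model = CTGAN(epochs=epochs)
    model.fit(real, discrete_columns)
    return model.sample(n_rows)

# Example call (hypothetical file names):
# synthetic = generate_with_ctgan("campaign.csv", n_rows=1000)
# synthetic.to_csv("campaign_synthetic.csv", index=False)
```

Numeric columns that are really categorical codes would have to be added to `discrete_columns` by hand.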

Install & Execute CTABGAN+

CTABGAN+ wasn't included in `requirements.txt` because it needs manual installation and doesn't have a version.

Download the CTABGAN+ repo by typing `git clone https://github.com/Team-TUD/CTAB-GAN-Plus.git` in your terminal.

Make sure your input real data is in "CSV" format.

To generate synthetic data, you can adjust these parameters. The output has to be saved as a "CSV" file, and the code looks as below:

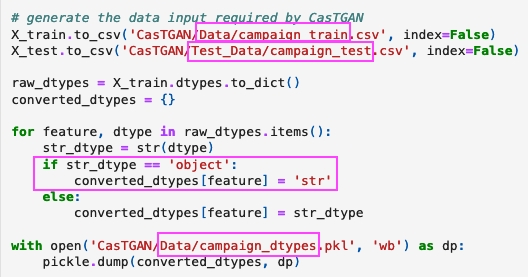

Install & Execute CasTGAN

CasTGAN wasn't included in `requirements.txt` because it needs manual installation and doesn't have a version.

Download the CasTGAN repo by typing `git clone https://github.com/abedshantti/CasTGAN.git` in your terminal.

Edit the code in the CasTGAN repo by adding your dataset name in this list. Lady H. added "campaign" in this list.

Edit this code file to update seeds for your dataset. Lady H. updated seeds for dataset "campaign".

Generate the data input as below:

Split the real data into training and testing data.

Save the real training data in CasTGAN's `Data/` folder, naming it "{your_dataset_name}_train.csv".

Save the real testing data in CasTGAN's `Test_Data/` folder, naming it "{your_dataset_name}_test.csv".

Save the features' data types in the `Data/` folder, making sure categorical features use `str` as their data type.

Through your terminal, locate the `CasTGAN/` folder.

Run `python -m main --dataset="campaign" --epochs=10`, replacing the `--dataset` value with your dataset's name.

Besides `epochs`, you can specify other parameters in the above command; check CasTGAN parameters for more.
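The split-and-save part of the data preparation above can be scripted. The sketch below covers only the train and test CSV files; it does not write the feature data type file, whose exact format should be checked in the CasTGAN repo. The function name, the 20% test fraction and the seed are assumptions.

```python
import csv
import random
from pathlib import Path

def save_castgan_inputs(csv_path, dataset_name, repo_dir, test_fraction=0.2, seed=0):
    """Split a CSV into train/test files under CasTGAN's Data/ and Test_Data/ folders."""
    with open(csv_path, newline="") as f:
        reader = csv.reader(f)
        header, rows = next(reader), list(reader)

    # Shuffle reproducibly, then carve off the test rows.
    random.Random(seed).shuffle(rows)
    n_test = int(len(rows) * test_fraction)
    splits = {
        Path(repo_dir) / "Data" / f"{dataset_name}_train.csv": rows[n_test:],
        Path(repo_dir) / "Test_Data" / f"{dataset_name}_test.csv": rows[:n_test],
    }
    for path, part in splits.items():
        path.parent.mkdir(parents=True, exist_ok=True)
        with open(path, "w", newline="") as f:
            writer = csv.writer(f)
            writer.writerow(header)
            writer.writerows(part)
    return list(splits)  # [train_path, test_path]

# Example call (hypothetical paths):
# save_castgan_inputs("campaign.csv", "campaign", "CasTGAN")
```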

Experiments

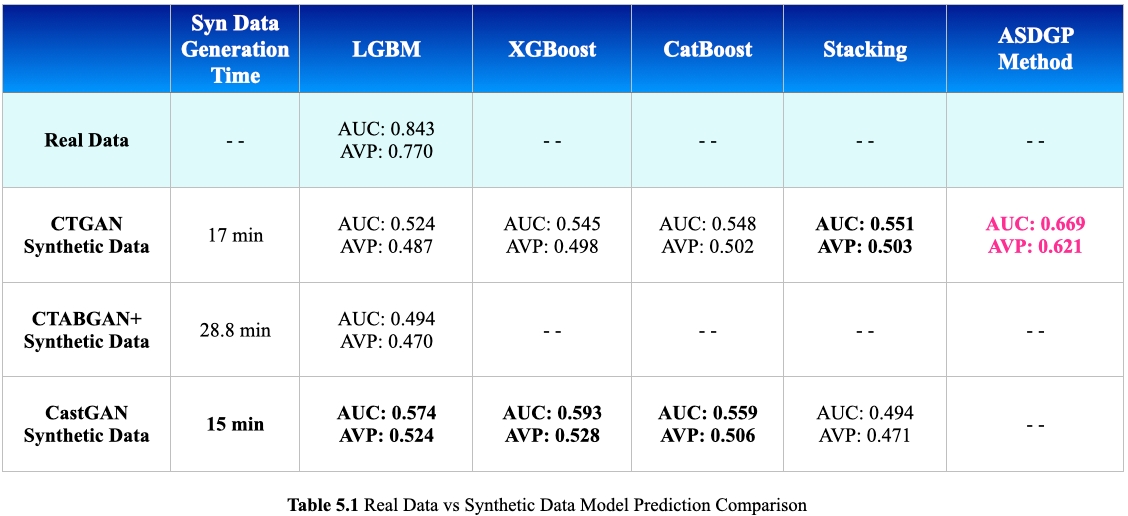

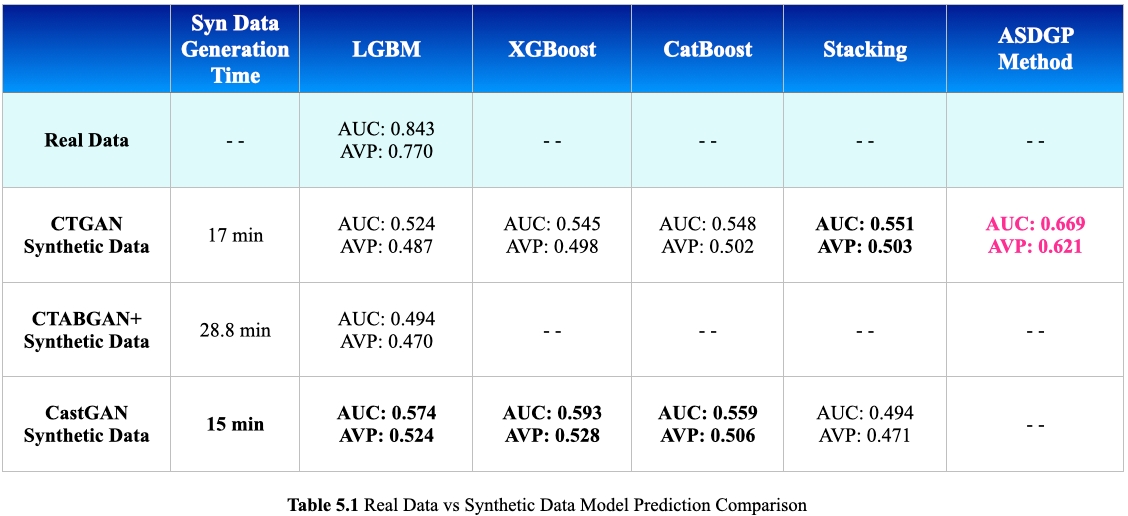

The experiment results are summarized in table 5.1. To do the experiments,

Each TGAN generates "synthetic" training data, which shares the same number of records as the "real" training data.

Every ensemble model was trained using "synthetic" training data and subsequently tested on the "real" testing data. Its performance was then compared with that of the model trained on the "real" training data.

With each TGAN, Lady H. tried different settings and used the best-performing synthetic dataset to compile table 5.1.

To generate the synthetic data:

CTGAN took 17 minutes

CTABGAN+ took 28.8 minutes

CasTGAN took 15 minutes

When comparing the datasets' LGBM performance, CasTGAN came out ahead. However, both AUC and AVP (average precision score) values were relatively low, falling below 0.6. Since CTABGAN+ took the longest to generate data and got the lowest LGBM performance, Lady H. focused the comparison solely on CTGAN and CasTGAN in subsequent experiments. CasTGAN continued to come out ahead with XGBoost and CatBoost, while the performance remained consistently low. Lady H. also applied stacking, combining the optimized LGBM, XGBoost and CatBoost models, but only got worse performance.

Finally, Lady H. got both AUC and AVP above 0.6 by employing the "ASDGP Method". How does this method work? 😉

Lady H. observed that the AUC and AVP of synthetic data were consistently lower than those of real data. This prompted her to investigate whether certain features caused more incorrect predictions in the model trained on synthetic data. To validate her assumption, she did some SHAP analysis.

A higher positive SHAP value suggests that a feature contributes more to pushing the predicted value higher, whereas a more negative SHAP value indicates that the feature contributes more to pushing the predicted value lower.

Firstly, she checked the positive class records where the model trained on real data predicted correctly while the model trained on synthetic data predicted incorrectly.

In the "Large Positive Proba Difference" chart, she chose records with `real_data_predicted_probability - synthetic_data_predicted_probability >= 0.6`.

The left SHAP decision plot represents feature contributions from the real data. Organized right-leaning lines suggest the features collectively contribute to higher probabilities, the correct class.

The right SHAP decision plot represents feature contributions from CTGAN's synthetic data. Organized left-leaning lines suggest features collectively contribute to lower probabilities, the incorrect class.

In the "Small Positive Proba Difference" chart, she chose records with `0.1 > real_data_predicted_probability - synthetic_data_predicted_probability > 0`.

In both SHAP decision plots, the lines are gathered in the middle, and there's no clear left- or right-leaning pattern.
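Selecting the two groups of records comes down to thresholding the probability difference. In the sketch below the helper name and the sample probabilities are hypothetical; the 0.6 and 0.1 cut-offs are the ones quoted above.

```python
def split_by_proba_gap(real_probas, synth_probas, large=0.6, small=0.1):
    """Return record indices for the large-gap and small-gap groups."""
    large_idx, small_idx = [], []
    for i, (p_real, p_synth) in enumerate(zip(real_probas, synth_probas)):
        gap = p_real - p_synth
        if gap >= large:
            large_idx.append(i)   # "Large Positive Proba Difference"
        elif 0 < gap < small:
            small_idx.append(i)   # "Small Positive Proba Difference"
    return large_idx, small_idx

# Predicted probabilities of the positive class for four positive records.
real_probas = [0.95, 0.80, 0.70, 0.90]
synth_probas = [0.20, 0.75, 0.72, 0.50]

large_idx, small_idx = split_by_proba_gap(real_probas, synth_probas)
print(large_idx, small_idx)  # [0] [1]
```

The resulting index lists can then be used to pick the rows whose SHAP values go into each decision plot.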

The comparison between "Large Positive Proba Difference" and "Small Positive Proba Difference" indicates that, for positive class records, some top features drive the prediction differences between the model trained on real data and the model trained on synthetic data. When such features have a bigger influence, the differences are larger, and when they have a smaller influence, the differences are smaller.

Then how about negative class records? Can we find similar patterns? Indeed, yes!

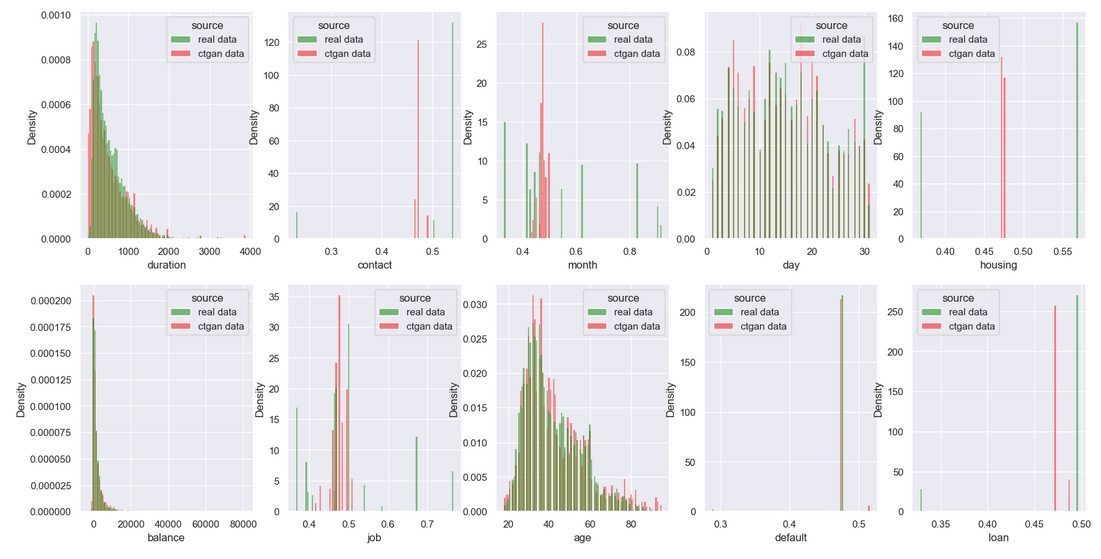

Next, she chose the most "suspicious" features from the above analysis to further check their distributions.

This is the distribution comparison between real data and CTGAN generated synthetic data, for all positive records:

And this is the distribution comparison for all negative records:

Comparing these 2 screenshots, did you find any interesting clues? 😉

Lady H. noticed that for features like "duration" and "age", the CTGAN-generated values in the negative class overlap heavily with the positive class values, whereas the real data's distributions of these features are better at distinguishing the positive and negative classes. This might be part of the reason why the model trained on real data performed better in classification. With this in mind, she decided to generate synthetic training data for the positive class and the negative class separately, hoping this would yield more accurate feature distributions in both classes and therefore improve the performance of the model trained on synthetic data.

She calls this method ASDGP, standing for Adjusted Synthetic Data Generation Process. Yes, it's a made-up name 😉!
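The idea of generating each class separately can be sketched independently of the TGAN used. In the example below, `fit_and_sample` stands for any generator; the bootstrap stand-in, the target column name "deposit", the toy rows and the choice to keep each class at its original size are all assumptions made for illustration.

```python
import random

def generate_per_class(rows, target, fit_and_sample):
    """Generate synthetic rows for each target class separately, then merge them."""
    synthetic = []
    for label in sorted({row[target] for row in rows}):
        class_rows = [row for row in rows if row[target] == label]
        # One generator per class, sampling as many rows as the class has.
        synthetic.extend(fit_and_sample(class_rows, len(class_rows)))
    return synthetic

# Stand-in generator (assumption): bootstrap resampling instead of a real TGAN.
_rng = random.Random(0)

def bootstrap_generator(class_rows, n_rows):
    return [dict(_rng.choice(class_rows)) for _ in range(n_rows)]

real_rows = ([{"duration": 600 + i, "deposit": 1} for i in range(5)]
             + [{"duration": 100 + i, "deposit": 0} for i in range(7)])
synthetic_rows = generate_per_class(real_rows, "deposit", bootstrap_generator)
```

Because each generator only ever sees one class, features such as "duration" cannot leak values from the other class into its output.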

By applying ASDGP on CTGAN generated data, the performance finally jumped above 0.6.

Do you have any other ideas to further improve the prediction performance of synthetic data trained model? Would you like to try with CasTGAN? You're very welcome to share your experiments or ideas here!
