🍃Build an AI-Powered App

Demo - Local Stream

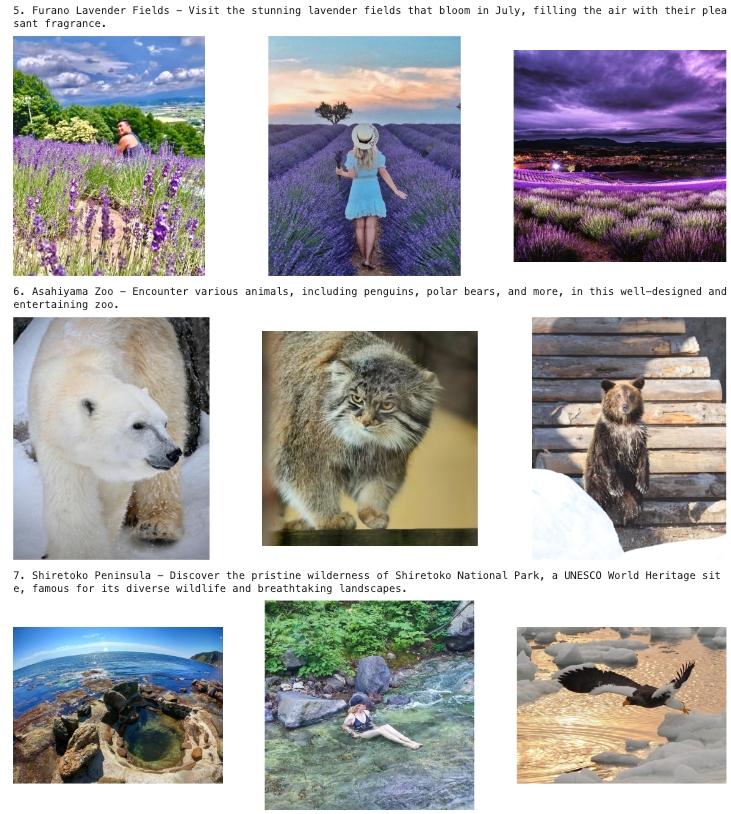

Local Stream is an AI-powered travel recommendation app designed to enhance trip planning. Users simply enter their destination and travel month, and the app suggests recommended locations on a map, complete with photos of popular activities tailored to that season. Created by Lady H., an avid traveler herself, the app was developed to help make travel planning more efficient and enjoyable.

💖 Check out Local Stream here!

As everyone's aware, AI is rapidly advancing worldwide! To pave the way for tomorrow, it's crucial to embrace creativity and leverage AI's potential to its fullest. Creating AI-powered apps is a straightforward path to realizing this vision!

Ready to create your own app? 😉 It's free to launch, cost-efficient, and requires zero web development skills! Let's start now and make it happen!

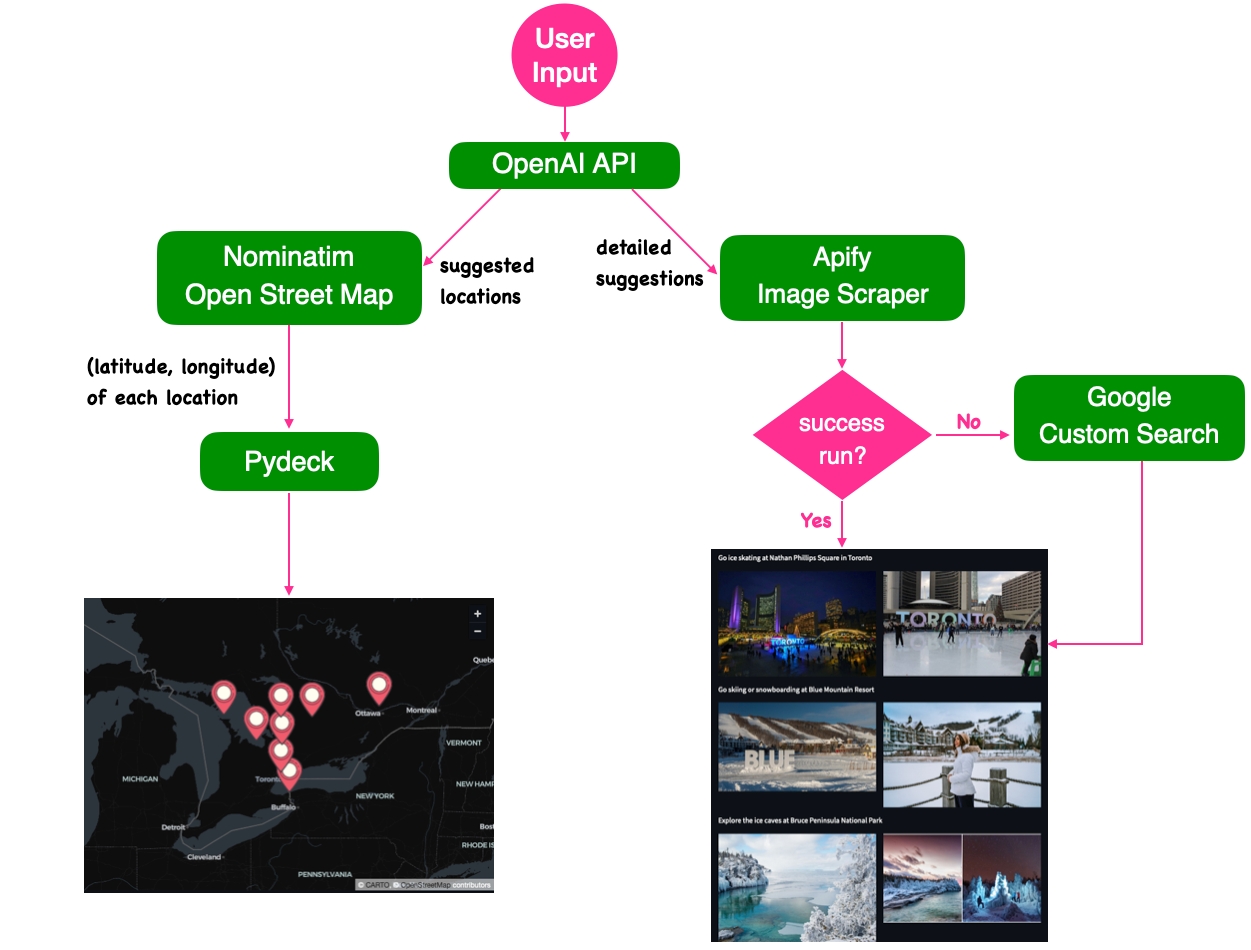

The Workflow of Local Stream

Setup

1. Install Streamlit

To develop and deploy the app for free, we can use Streamlit, a platform that enables data scientists to build and publish apps in Python. Installation is straightforward: just run pip install streamlit.

2. Create and Fund OpenAI Account

ChatGPT is a good choice for recommending local travel activities, particularly when the recommendations should be tailored to the selected season. Follow this tutorial to:

create an OpenAI account

create and save the API key

set up billing and fund your OpenAI account

Do words like "billing" and "funding" sound scary 💸🤑? Don't worry! ChatGPT is cost-efficient. For the usage in Local Stream, it costs only about $0.01 USD for every 4 to 5 queries.

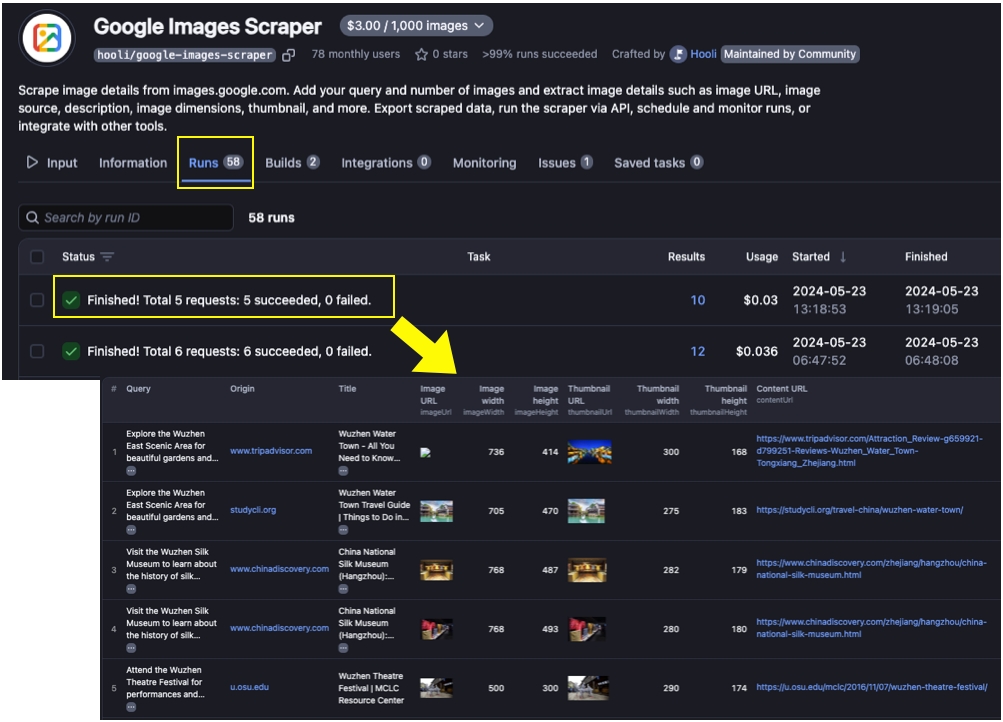

3. Enable Apify Image Scraper

Apify's image scraper delivers high-quality image search results, both in the images' resolution and in their relevance to the search queries. To set up this scraper:

Click Try for free in Apify's Google Image Scraper and sign up for the free account.

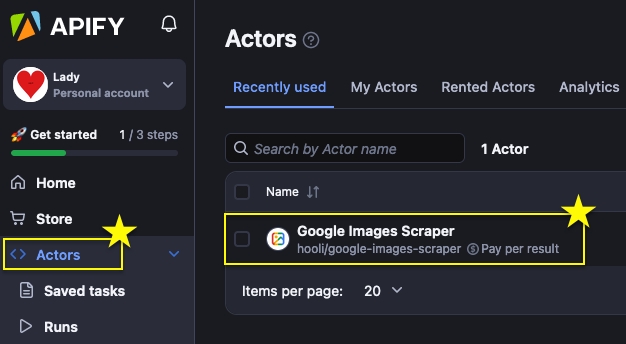

After logging in, you should be able to see Google Images Scraper in your Actors.

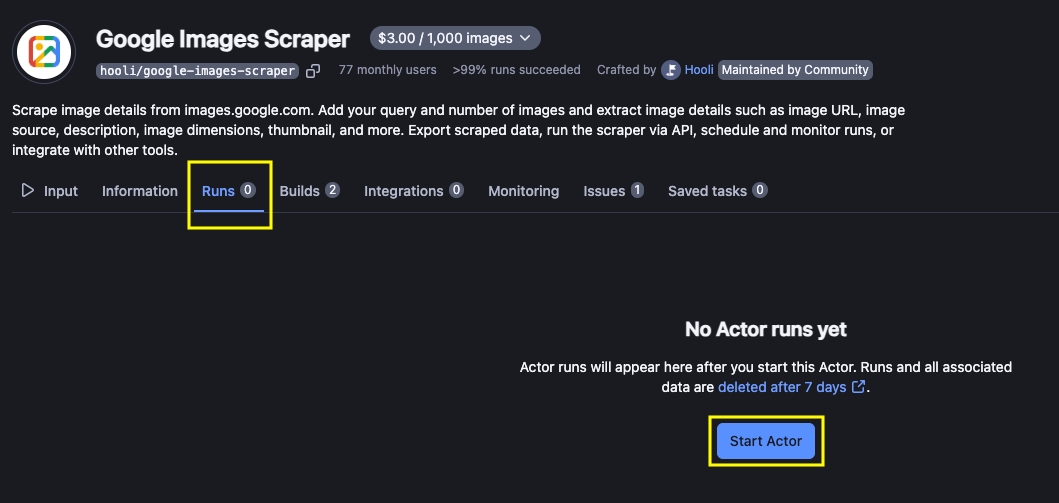

Click Google Images Scraper, then click Runs, followed by clicking Start Actor.

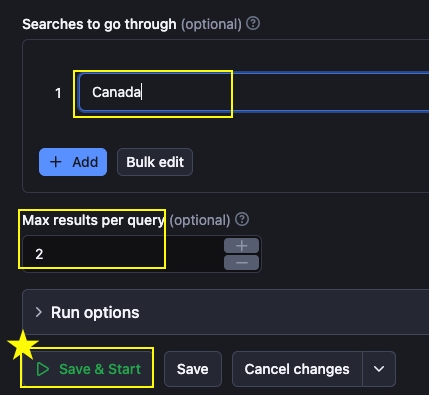

Next, you will see a page where you mainly need to click Save & Start to start the actor. Before clicking this button, you can also specify the image search query and maximum number of returned results. In this example, Lady H. was searching for 2 images of "Canada".

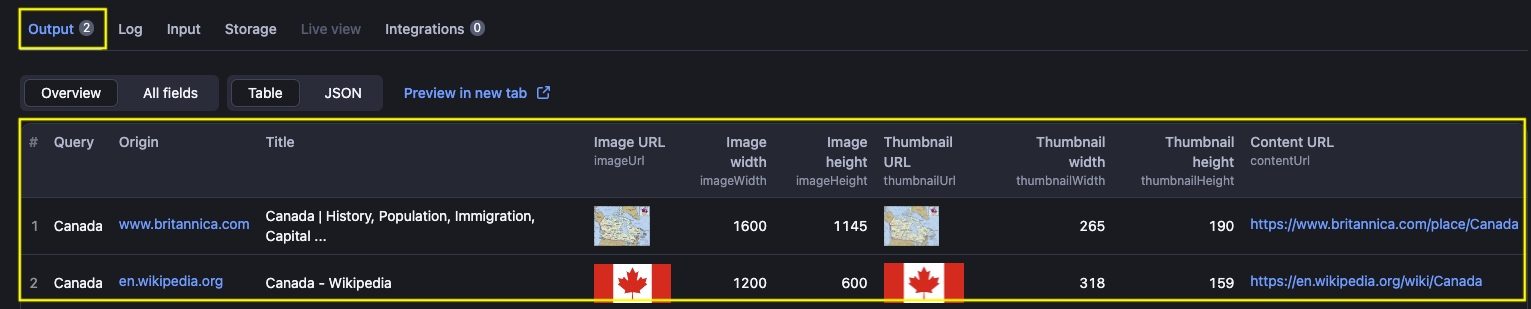

After the actor has been built successfully, you will be directed to the Output page, like this:

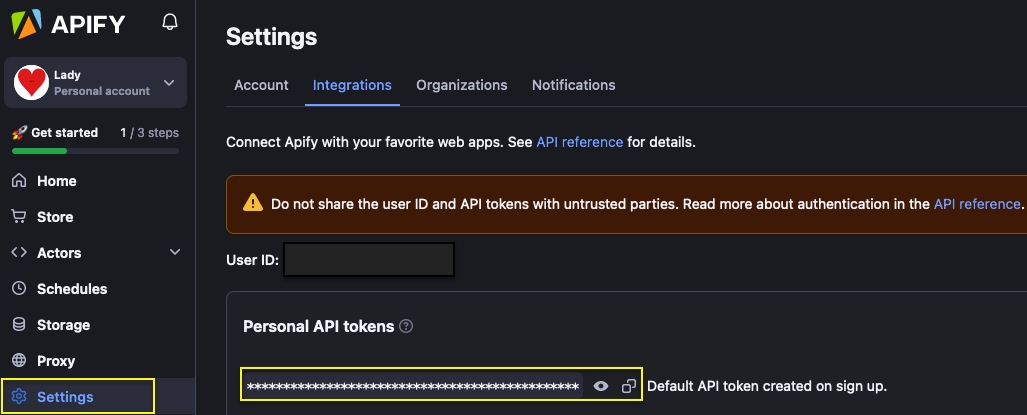

Now go to Settings to get your API token.

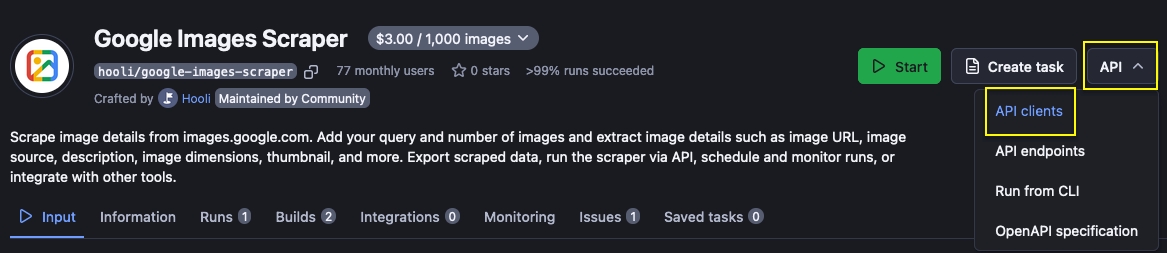

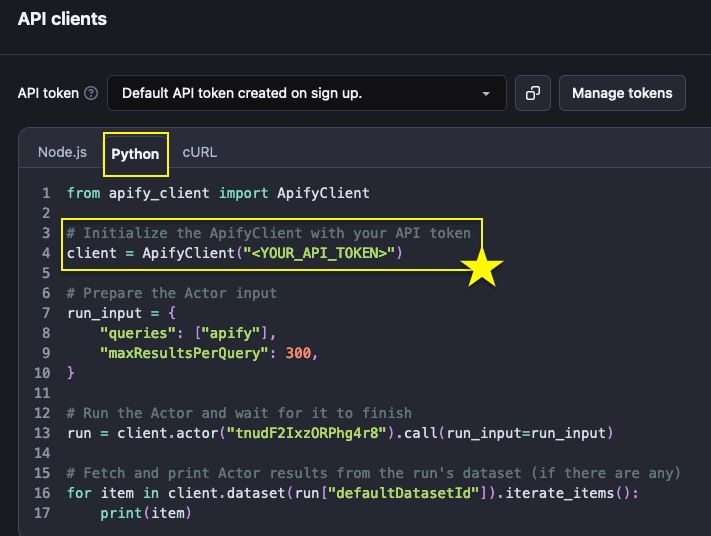

Apify has even prepared the whole piece of code for you to copy in order to use this scraper. Go back to Google Images Scraper and click API in the top right corner. Then click API clients from the dropdown menu.

The code template is available in different languages. Choose Python and copy the code there. To execute this scraper, you only need to replace "<YOUR_API_TOKEN>" with your own API token.
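For orientation, a call through Apify's Python client tends to look roughly like the sketch below. This is not Apify's generated template: the actor ID, the run-input dictionary, and the "imageUrl" result field are placeholders assumed here for illustration, so take the real values from the API clients page and the actor's Output page.

```python
from typing import Iterable


def collect_image_urls(items: Iterable[dict], url_key: str = "imageUrl") -> list:
    """Pull image URLs out of dataset items, skipping items without one.

    `url_key` is an assumed field name; check the actor's Output page.
    """
    return [item[url_key] for item in items if item.get(url_key)]


def run_image_search(api_token: str, actor_id: str, run_input: dict) -> list:
    """Start the actor, wait for it to finish, and return the image URLs."""
    # Imported lazily so the helper above works without the package installed.
    from apify_client import ApifyClient  # pip install apify-client

    client = ApifyClient(api_token)
    # call() starts the run and blocks until the actor finishes.
    run = client.actor(actor_id).call(run_input=run_input)
    items = client.dataset(run["defaultDatasetId"]).iterate_items()
    return collect_image_urls(items)
```

Keeping the URL extraction in its own small function makes it easy to adjust once you know which fields the actor really returns.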

Apify provides $5 of free credit every month. Since each image search costs only $0.003, this should be enough unless your app becomes very popular. Otherwise, you can check Apify's pricing options for your needs. 😉 Tip: you can also create multiple Apify accounts to use the free service for a longer term 😉.
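To get a feel for how far the free credit goes, here is the arithmetic with the two numbers quoted above. The number of image searches per results page is a made-up value for illustration, not a figure from Local Stream.

```python
FREE_CREDIT_USD = 5.00        # monthly free credit, as quoted above
COST_PER_SEARCH_USD = 0.003   # cost of one image search, as quoted above
SEARCHES_PER_PAGE = 5         # hypothetical: image searches per results page


def searches_covered(credit: float, cost_per_search: float) -> int:
    """Whole number of searches the credit pays for."""
    return int(credit / cost_per_search)


monthly_searches = searches_covered(FREE_CREDIT_USD, COST_PER_SEARCH_USD)
monthly_page_loads = monthly_searches // SEARCHES_PER_PAGE
print(monthly_searches, monthly_page_loads)
```

Under that assumption, the free tier covers about 1,666 searches, or roughly 333 results pages, per month.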

4. (Optional) Enable Google Custom Search

While the chances of Apify failing to return output, such as due to a timeout error, are low, they are not zero. Such occurrences can negatively impact user experience. To ensure smooth performance, consider using an alternative image search solution. Among the free options, Lady H. chose Google Custom Search for its speed and allowance of 100 free queries per day. To use it, you need to create a Google Search API key and create a search engine to get its ID.
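Once you have the API key and the search engine ID, an image query against the Custom Search JSON API could be sketched as follows. The function names are illustrative and are not the ones used in Local Stream; the endpoint and the key, cx, q, searchType, and num parameters are the ones the API documents.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

API_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_image_search_url(api_key: str, engine_id: str, query: str, num: int = 2) -> str:
    """Build the request URL for an image search."""
    params = {
        "key": api_key,         # your Google Search API key
        "cx": engine_id,        # your search engine ID
        "q": query,
        "searchType": "image",  # return image results instead of web pages
        "num": num,             # the API allows 1 to 10 results per request
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


def search_image_links(api_key: str, engine_id: str, query: str, num: int = 2) -> list:
    """Call the API and return the image URLs (needs network access and a valid key)."""
    url = build_image_search_url(api_key, engine_id, query, num)
    with urlopen(url, timeout=10) as response:
        payload = json.load(response)
    # "items" is absent when the search returns nothing.
    return [item["link"] for item in payload.get("items", [])]
```

Every call to search_image_links() counts against the 100 free queries per day, so it is worth caching results while you experiment.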

5. Other Key Packages to Install

Besides the streamlit package, you also need to install the following packages:

pip install pydeck to enable the display of suggested locations on the map.

pip install openai to use the OpenAI API.

pip install streamlit-extras to use extra features of Streamlit.

pip install apify-client to access the Apify API.

pip install pillow to enable image display from image URLs.

Code Structure

To make a Streamlit app work, you need to follow a few rules in the code structure:

A requirements.txt file lists the Python packages and their versions in your environment. This file is essential for setting up the environment during app deployment.

To generate this file, just run pip freeze > requirements.txt, which records all the packages in your environment.

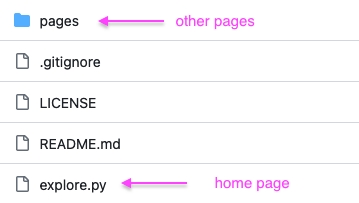

Create a .py file as the app's home page, such as the "explore.py" shown below.

Create a folder named "pages" to store all app pages except the home page. Each page in this folder should also be a .py file.

The folder must be named "pages", otherwise Streamlit won't be able to locate the pages 😉.
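If you want to double-check your layout before deploying, a tiny helper like the one below can list the files Streamlit should pick up as pages. It is only a sanity check written for this guide, and "results.py" in the comment is a hypothetical page name.

```python
from pathlib import Path

# Expected layout (file names other than the "pages" folder are examples):
#   requirements.txt
#   explore.py        <- home page
#   pages/
#       results.py    <- every other page


def list_pages(app_root: Path) -> list:
    """Return the .py files found in the pages/ folder under app_root."""
    pages_dir = app_root / "pages"
    if not pages_dir.is_dir():
        return []  # folder missing or misnamed
    return sorted(p.name for p in pages_dir.glob("*.py"))


print(list_pages(Path(".")))
```

An empty list usually means the folder is missing or has a different name.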

🌻 Check Local Stream's code structure here >>

Build Home Page

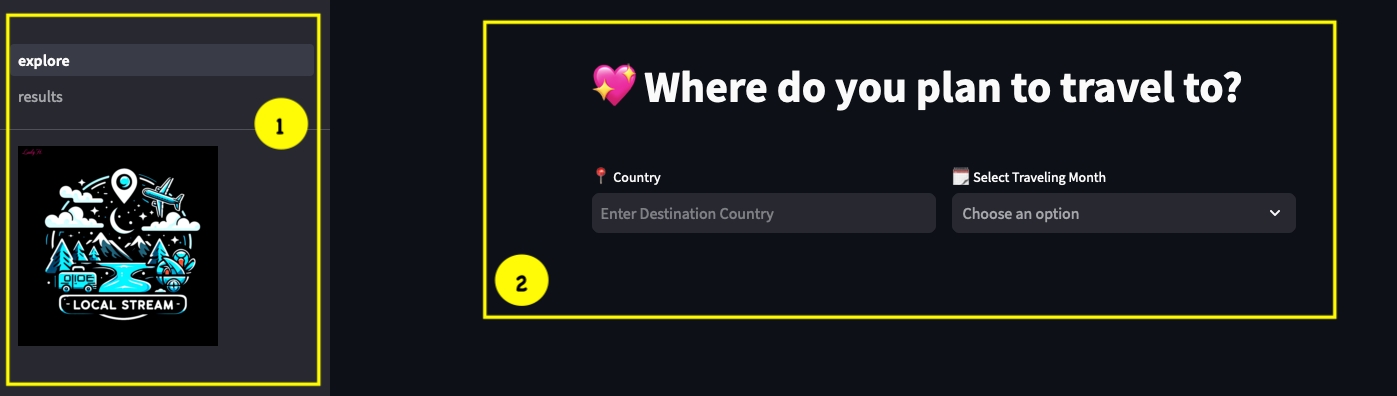

Every page in Streamlit has 2 components:

1st component: The side bar on the left.

Streamlit will automatically detect all of your web pages and list them on the side bar.

You can decide which elements to show on the sidebar, such as the logo, required user input, etc.

2nd component: The main web elements are on the right side.

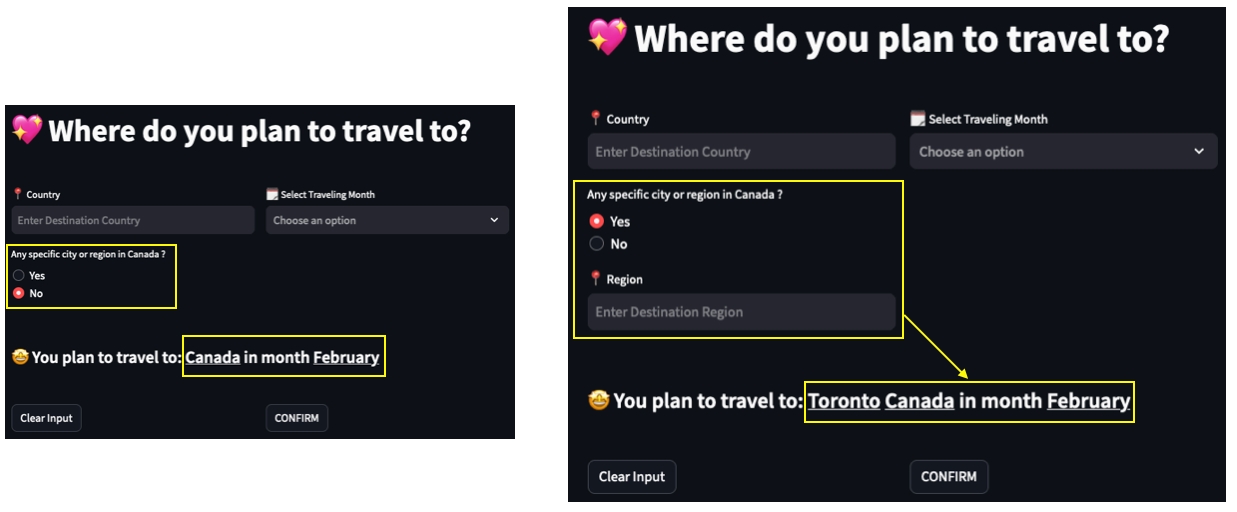

The user interface for Local Stream is straightforward. Users need to input their destination country and the month of their intended travel. Additionally, they can further specify the particular region within the country they wish to visit.

Now let's break down the code of this home page to learn how to build an app through Streamlit.

🌻 Check home page code here >>

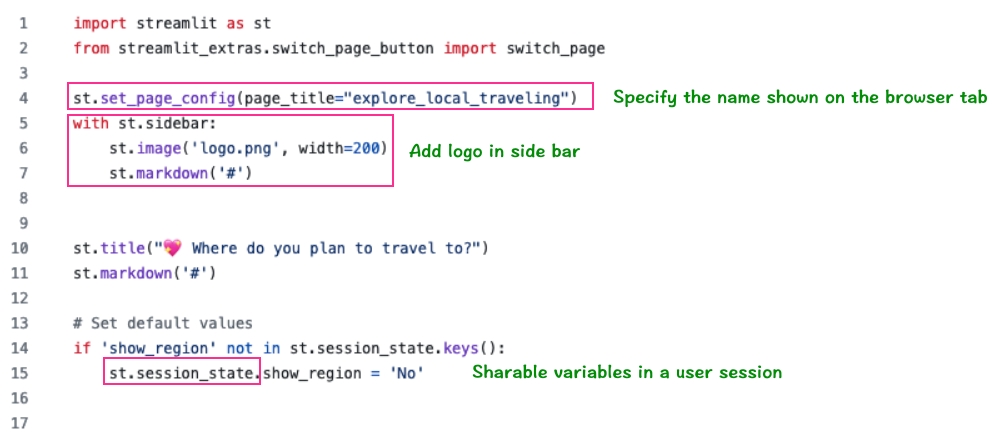

In the code below:

Line 4 specifies the page title, which will be displayed in the user's browser tab.

Line 5 ~ 7 shows how to add elements to the sidebar. The code here adds the logo image there.

Line 13 ~ 15 sets the default value of "show_region"; you will see this web element later. This setting means that, by default, the user won't specify the traveling region. It is important to highlight the use of st.session_state: it stores all variables that can be shared within a user session, allowing these variables to be accessed across different pages of the app and ensuring their values remain consistent throughout a user session.
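As a rough illustration of those three steps (page title, sidebar logo, session default), a page could start like the sketch below. The logo path and the helper names are assumptions made for this example, not the code in the screenshot.

```python
from collections.abc import MutableMapping


def set_default(state: MutableMapping, key: str, value) -> None:
    """Set state[key] only if it is missing, so reruns keep the user's choice."""
    if key not in state:
        state[key] = value


def render_page_header() -> None:
    """Configure the page, fill the sidebar, and initialise session defaults."""
    # Imported lazily so set_default() can be used and tested without Streamlit.
    import streamlit as st

    st.set_page_config(page_title="Local Stream")  # text shown in the browser tab
    with st.sidebar:
        st.image("logo.png")  # hypothetical logo file
    # st.session_state behaves like a dict shared across pages in one session.
    set_default(st.session_state, "show_region", "No")
```

In a real page file you would call render_page_header() at the top of the script, since Streamlit reruns the whole file on every interaction. The "only if missing" check is what stops a rerun from resetting the user's choice.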

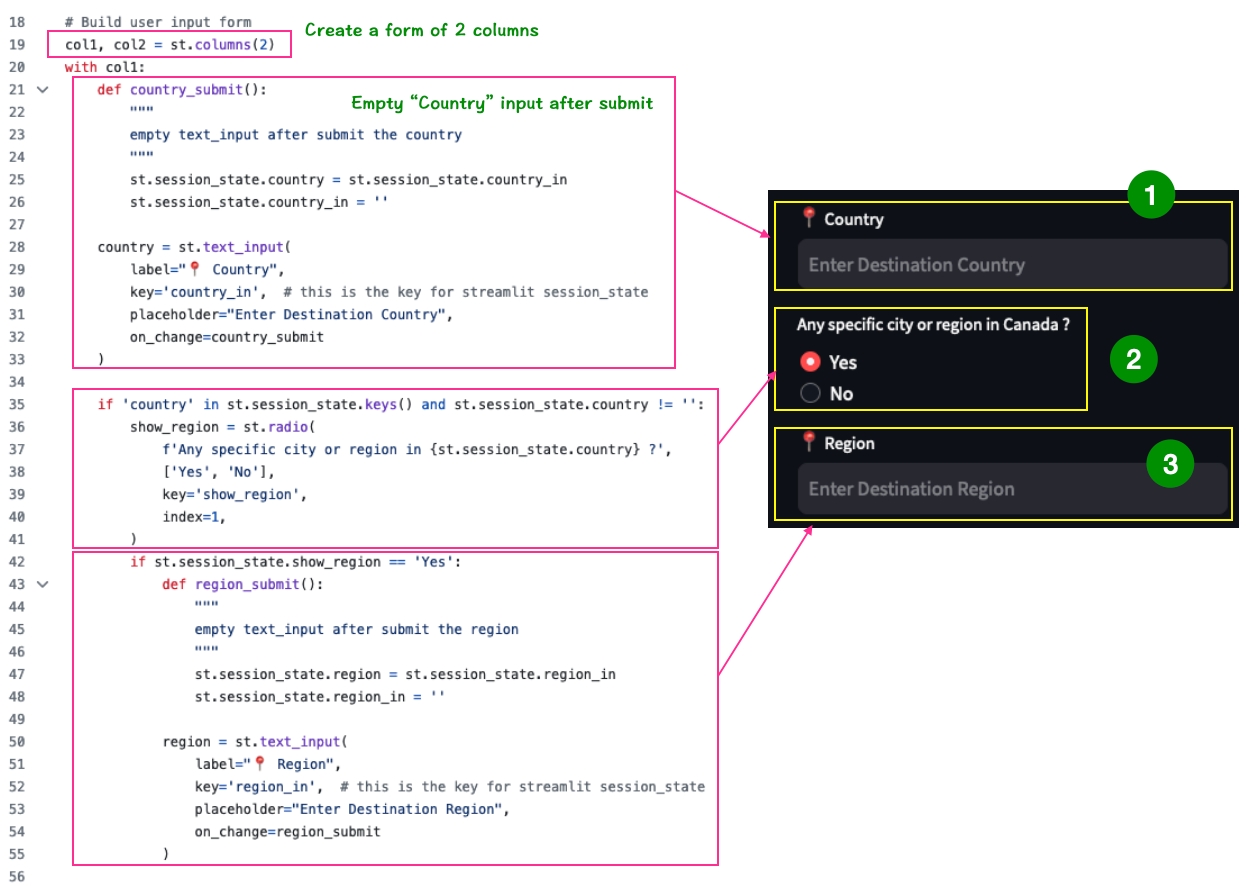

Line 19 ~ 72 creates the web elements on home page:

Line 19 creates a table of 2 columns.

In the first column, col1:

A text_input is created to submit the "Country" value. Once the value is submitted, this text input is cleared. Streamlit provides multiple ways for users to submit the value: they can press 'Enter', press 'Tab', or simply move focus out of the input field.

After the user fills in the country value, a radio button appears, asking whether to specify a region. By default, its value is "No", as specified in line 15.

If the user chooses "Yes" in the radio button, another text_input appears to let the user fill in the region.

In the second column, col2, a selectbox is created to allow the user to choose the traveling month.
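The layout described above can be sketched in a few lines. The widget labels are invented for this example, and the sketch leaves out the "clear after submit" behaviour of the country field.

```python
import calendar

# Month names for the selectbox: "January" through "December".
MONTHS = list(calendar.month_name)[1:]


def render_inputs():
    """Draw the two-column input form and return (country, region, month)."""
    import streamlit as st  # imported lazily; only needed when the page renders

    col1, col2 = st.columns(2)
    with col1:
        country = st.text_input("Country")
        region = ""
        if country:
            # The radio only appears once a country has been entered.
            # key="show_region" ties it to the default stored in session state.
            choice = st.radio("Specify a region?", ["No", "Yes"], key="show_region")
            if choice == "Yes":
                region = st.text_input("Region")
    with col2:
        month = st.selectbox("Travel month", MONTHS)
    return country, region, month
```

Because Streamlit reruns the script after each interaction, the plain if statements are enough to make the radio button and the region field appear step by step.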

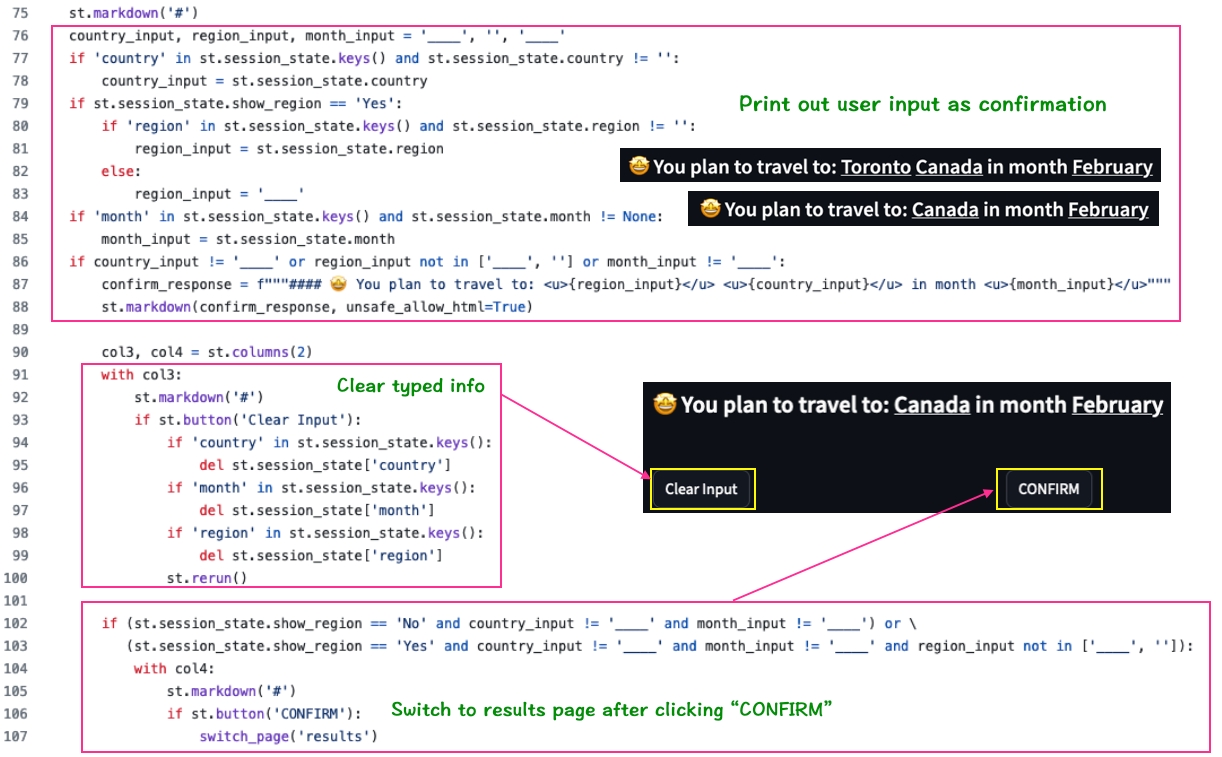

The last component of the home page enables input confirmation and input clean up:

Line 76 ~ 88 will display the user's input, including country, region and selected month.

Line 91 ~ 100 cleans up user input. When the user clicks the "Clear Input" button, the user interface clears all of the user's input.

After the user has entered all the required information, a "CONFIRM" button will appear. Upon clicking this button, the user interface will switch to the results page.
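A minimal sketch of this confirm-and-clear logic is shown below. It assumes the input widgets were created with the keys "country" and "region" and that the results page lives at "pages/results.py". Both are hypothetical, and st.switch_page() needs a reasonably recent Streamlit release.

```python
def clear_inputs(state, keys=("country", "region")) -> None:
    """Remove the listed keys from a dict-like state, ignoring missing ones."""
    for key in keys:
        if key in state:
            del state[key]


def ready_to_confirm(country: str, month: str) -> bool:
    """The CONFIRM button should only show once the required fields are set."""
    return bool(country.strip()) and bool(month)


def render_actions(country: str, region: str, month: str) -> None:
    """Show the current input, a clear button, and the confirm button."""
    import streamlit as st  # imported lazily; only needed when the page renders

    st.write(f"Country: {country} | Region: {region or 'not specified'} | Month: {month}")
    # The callback runs before the next rerun, so the widgets come back empty.
    st.button("Clear Input", on_click=clear_inputs, args=(st.session_state,))
    if ready_to_confirm(country, month) and st.button("CONFIRM"):
        st.switch_page("pages/results.py")  # hypothetical page path
```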

🌻 Check home page code here >>

The loaded results page will showcase a map featuring all the suggested spots at the travel destination, which gives users an overview. Then, for each suggestion, 1 or 2 photos of local activities help users visualize the suggested experience!

Now let's see how to implement the output page!

Build Results Page

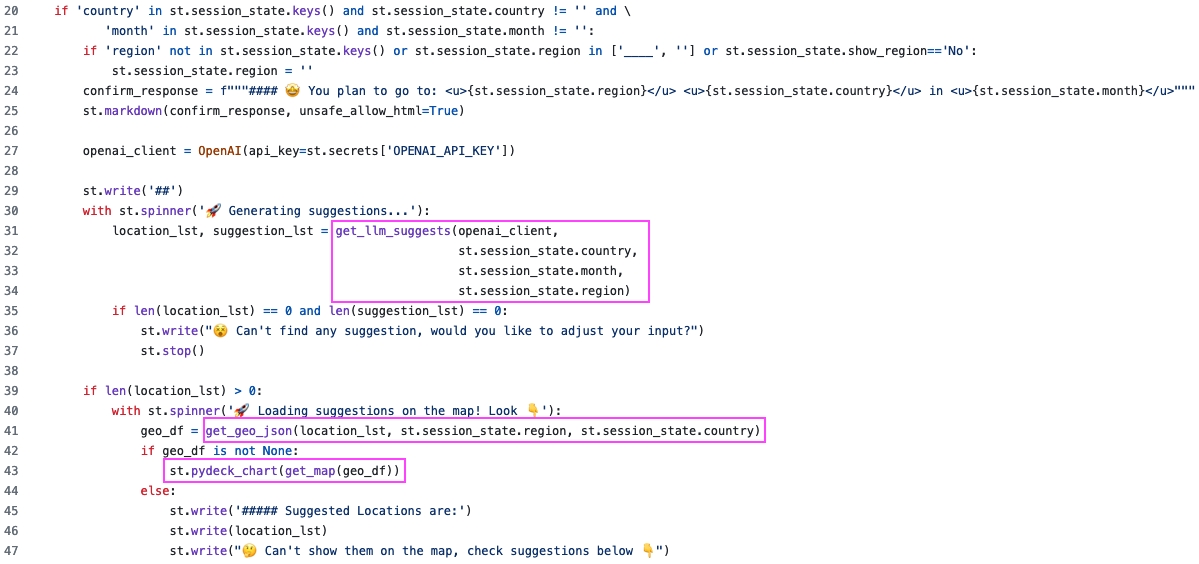

The code below has the key logic of recommendation generation and map display.

get_llm_suggests() uses OpenAI to suggest traveling locations in your destination and local activities in each location.

get_geo_json() uses Nominatim, a geocoding software, to find the latitude and longitude of each suggested location.

get_map() creates a map based on the locations' latitude and longitude, then Streamlit's built-in pydeck_chart() displays the map.
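To make the last step more concrete, here is one way a map could be assembled with pydeck. It is a sketch rather than Local Stream's get_map(): the function names, marker radius, colour, and zoom level are arbitrary choices, and the points are passed as a list of dicts with "dest", "lat", and "lon" keys.

```python
def map_center(points: list) -> tuple:
    """Average latitude and longitude, used to centre the initial view."""
    lat = sum(p["lat"] for p in points) / len(points)
    lon = sum(p["lon"] for p in points) / len(points)
    return lat, lon


def build_deck(points: list):
    """Build a pydeck Deck with one marker per suggested location."""
    import pydeck as pdk  # pip install pydeck; imported lazily

    lat, lon = map_center(points)
    layer = pdk.Layer(
        "ScatterplotLayer",
        data=points,
        get_position="[lon, lat]",    # pydeck expects longitude first
        get_radius=20000,             # marker radius in metres (arbitrary)
        get_fill_color=[255, 99, 71],
        pickable=True,                # needed for the hover tooltip
    )
    view = pdk.ViewState(latitude=lat, longitude=lon, zoom=4)
    return pdk.Deck(layers=[layer], initial_view_state=view, tooltip={"text": "{dest}"})
```

On the page, st.pydeck_chart(build_deck(points)) would then render the map. If your locations are in a pandas dataframe, df.to_dict("records") gives the list-of-dicts form used here.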

🌻 Check results page code here >>

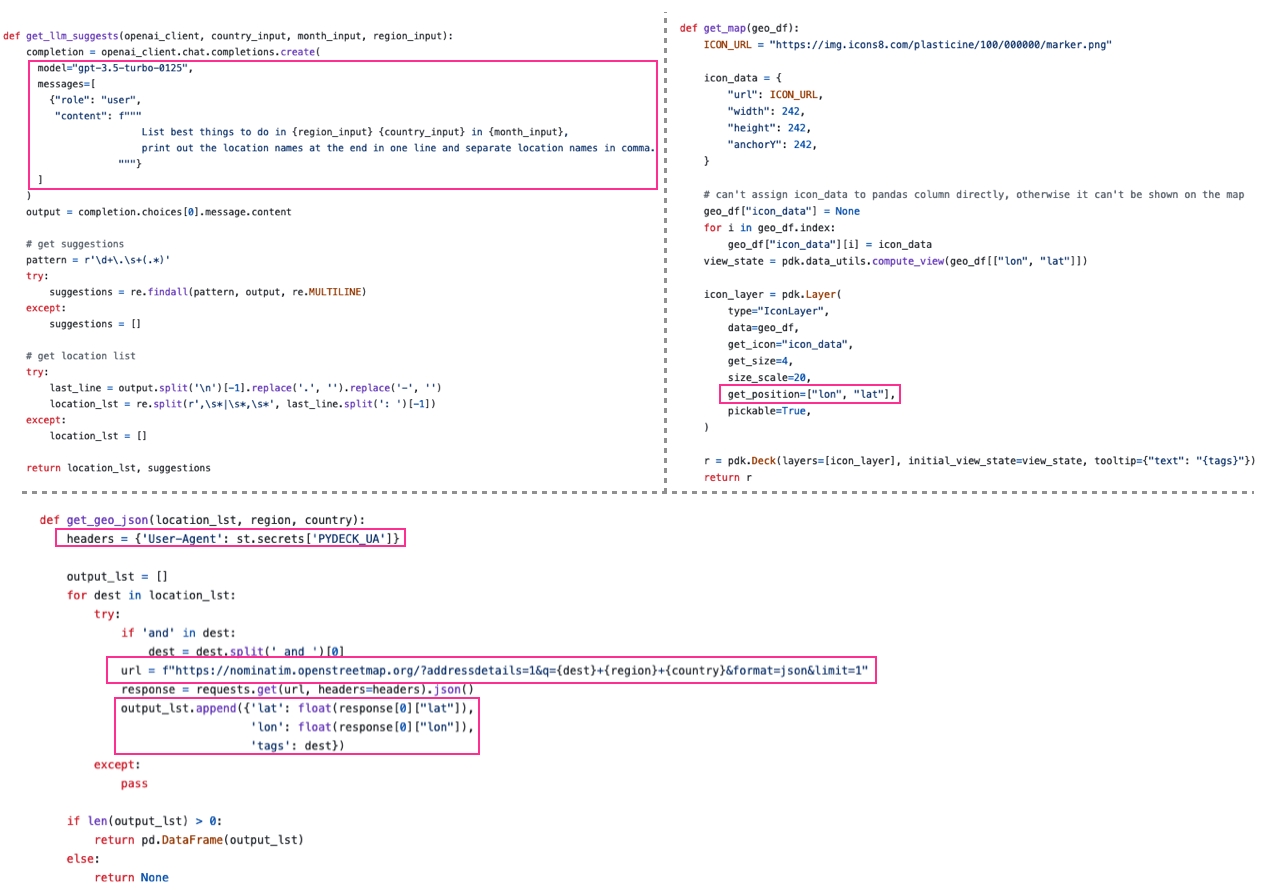

The code below shows the implementation of the 3 highlighted functions, which live in utils.py.

In get_llm_suggests():

The model used was GPT-3.5 Turbo; you can choose a more advanced model here.

messages creates a query that asks for the best activities to do in the user-specified region, country, and month, and for a list of the locations of those activities.

It returns the suggested location list and the detailed suggestions separately.

If the suggested location list is not empty, get_geo_json() generates geo information using the Nominatim service:

To use Nominatim, you have to specify a User-Agent header to identify yourself, which helps the service track usage and enforce its usage policies. Since its main purpose is to identify the user, you can use your email address as the value of User-Agent.

In the code below, Lady H.'s header was saved in Streamlit secrets as st.secrets['PYDECK_UA'] to keep it private. You will see the details in the deployment section later.

The geo information can be requested from a URL of this form: https://nominatim.openstreetmap.org/?addressdetails=1&q={dest}+{region}+{country}&format=json&limit=1. You only need to fill in dest, region, and country.

Its output is a pandas dataframe that stores each suggested location (dest), together with its latitude and longitude.

get_map() loads the output of get_geo_json() and, using the known latitude and longitude of each location, creates a map with pydeck.
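The first two functions can be approximated with the sketch below. The prompt wording, the helper names, and the use of the v1-style openai client are assumptions for illustration. Only the Nominatim URL pattern and the User-Agent requirement come from the description above.

```python
import json
from typing import Optional
from urllib.parse import urlencode
from urllib.request import Request, urlopen

NOMINATIM_URL = "https://nominatim.openstreetmap.org/"


def build_messages(country: str, month: str, region: str = "") -> list:
    """Chat messages asking for activities and their locations (wording is illustrative)."""
    place = f"{region}, {country}" if region else country
    question = (f"Suggest the best activities to do in {place} in {month}, "
                "and list the location of each activity.")
    return [{"role": "system", "content": "You are a helpful travel assistant."},
            {"role": "user", "content": question}]


def ask_llm(api_key: str, messages: list) -> str:
    """Send the messages to OpenAI and return the reply text (needs network access)."""
    from openai import OpenAI  # pip install openai; v1-style client assumed

    client = OpenAI(api_key=api_key)
    response = client.chat.completions.create(model="gpt-3.5-turbo", messages=messages)
    return response.choices[0].message.content


def build_geocode_url(dest: str, region: str, country: str) -> str:
    """Fill dest, region, and country into the Nominatim search URL."""
    query = " ".join(part for part in (dest, region, country) if part)
    params = {"addressdetails": 1, "q": query, "format": "json", "limit": 1}
    return f"{NOMINATIM_URL}?{urlencode(params)}"


def parse_geocode(dest: str, results: list) -> Optional[dict]:
    """Turn Nominatim's JSON list into one row, or None if nothing was found."""
    if not results:
        return None
    # Nominatim returns lat and lon as strings.
    return {"dest": dest, "lat": float(results[0]["lat"]), "lon": float(results[0]["lon"])}


def geocode(dest: str, region: str, country: str, user_agent: str) -> Optional[dict]:
    """Look up one location; user_agent identifies you to the service."""
    request = Request(build_geocode_url(dest, region, country),
                      headers={"User-Agent": user_agent})
    with urlopen(request, timeout=10) as response:
        return parse_geocode(dest, json.load(response))
```

Collecting the rows returned by geocode() into pandas.DataFrame(rows) would give the dest, lat, and lon table described above.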

After loading the map, photos of the recommended local activities will be displayed. For this app, Lady H. prefers to perform up-to-date photo searches to provide users with a current view of local happenings, which is also why the app is called "Local Stream". Considering price, image search quality, and speed, Lady H. found two available solutions:

Apify enabled image search

Google Custom Search enabled image search

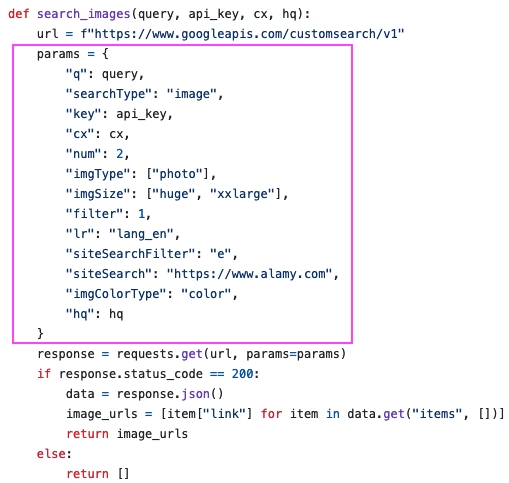

As shown in the code below, the main difference between these two approaches is that Apify executes all the image search queries before loading any images, while Google Custom Search executes a query and loads its images before moving on to the next query. Since Apify's image search is slower, executing all the queries upfront can reduce the total image search and loading time by half.

🌻 Check results page code here >>

Meanwhile, as you can see, the Google Custom Search solution is only used when the Apify solution is not available. Lady H. arranged it this way because Apify's image search generates higher quality and more relevant photos. However, Apify only offers $5 in free credits per month, which sometimes get used up before being renewed. When Apify is not available, Google Custom Search steps in, allowing 100 free queries daily and providing fast results. To narrow down Google search results, you can apply the filters here in the query, but it is always challenging to obtain high-quality image output.
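The "batch first, fall back if needed" arrangement can be expressed as a small generator. This is a simplified sketch, not the code from the results page: primary and fallback stand for any two search functions that take a query and return a list of image URLs.

```python
from typing import Callable, Iterator

SearchFn = Callable[[str], list]


def iter_image_results(queries: list, primary: SearchFn, fallback: SearchFn) -> Iterator[tuple]:
    """Yield (query, image_urls) pairs.

    Batch mode: run every query with `primary` before yielding anything.
    Fallback mode: if the batch fails, search and yield one query at a time.
    """
    try:
        batch = [(query, primary(query)) for query in queries]
    except Exception:
        # Primary search unavailable (timeout, credits used up, ...).
        for query in queries:
            yield query, fallback(query)
        return
    yield from batch
```

The page can loop over iter_image_results(...) and display each set of images as it arrives. Catching every exception keeps the sketch short; in a real app you may prefer to catch only the errors you expect.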

The search_images() implemented in utils.py shows all the filters Lady H. used for Google Custom Search.

Another benefit of using Apify is its logs of every run, which allow you to check execution times, image search results, and more. This can help you better understand the user experience and make further improvements.

The app development work is done! 🎉 Time to deploy the app! 💃

Deploy the App

Time to deploy the app to the public!

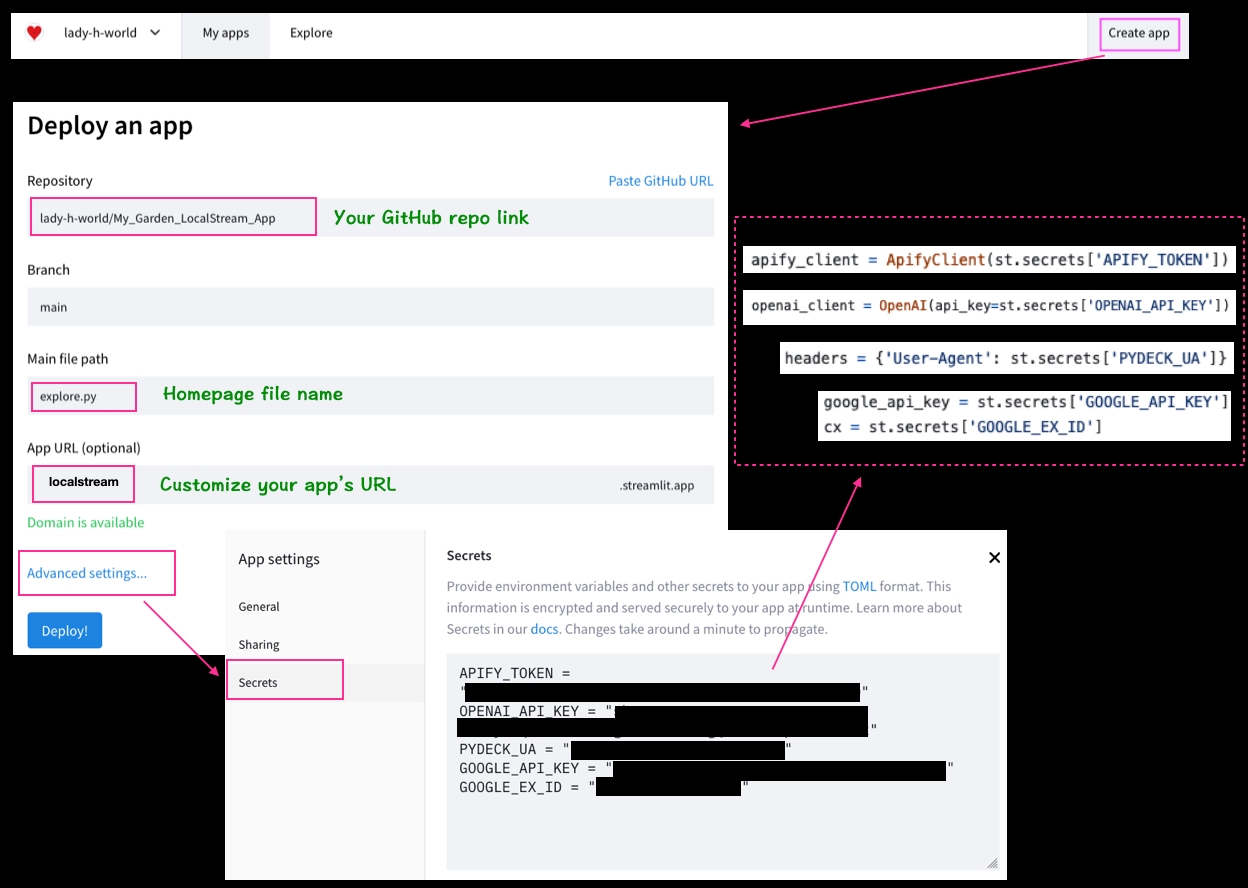

Make sure your code is saved as a public Github repo like this.

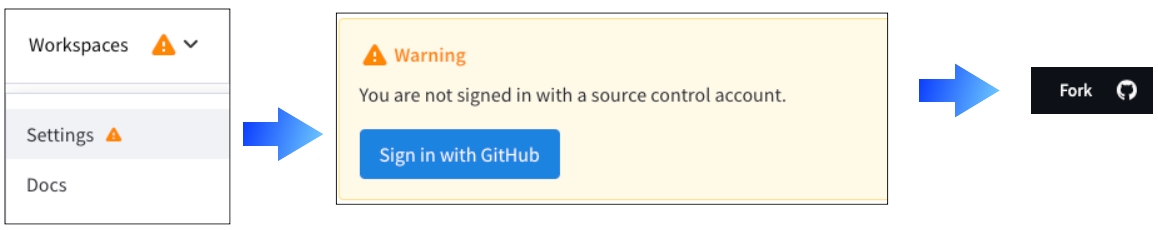

Create a Streamlit account using your Github login. This allows you to link your Github repo to Streamlit directly.

After logging in, click Create app. A form will pop up where you can fill in the GitHub repo link and homepage file name, and customize your app's URL. Click Advanced settings... and then Secrets to save the credentials. To call these credentials in the code, simply import streamlit as st and then use st.secrets['<credential name>']. After clicking Deploy!, your app will be accessible to the public! 🎉
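If you would like a clearer error when a credential is missing, you could wrap the lookup in a small helper like this one. The helper and the "OPENAI_API_KEY" name are hypothetical. "PYDECK_UA" is the secret name mentioned earlier.

```python
from collections.abc import Mapping


def require_secret(secrets: Mapping, name: str) -> str:
    """Return secrets[name], or raise a KeyError that says what to fix."""
    if name not in secrets:
        raise KeyError(f"Missing secret {name!r}; add it under Advanced settings > Secrets")
    return secrets[name]


def load_credentials() -> dict:
    """Read the credentials the app needs from Streamlit secrets."""
    import streamlit as st  # imported lazily; st.secrets acts like a read-only dict

    return {
        "openai_key": require_secret(st.secrets, "OPENAI_API_KEY"),  # hypothetical name
        "user_agent": require_secret(st.secrets, "PYDECK_UA"),
    }
```

When running locally, Streamlit reads the same values from a .streamlit/secrets.toml file, which should stay out of your public repo.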

After the app goes public, if you want to allow the public to fork your GitHub repo, go to your Streamlit account, where you will see an orange triangle near Workspaces. Click Settings in the dropdown menu, and then log in to the GitHub account where this app's code sits. After this authorization, you should be able to see the GitHub logo at the top right corner of your app.

Sometimes, you may need to update your app's settings, such as secrets. To do this, in your Streamlit account, click the 3 dots next to your app, then click Settings and make your changes. If the changes don't take effect immediately, click Reboot to ensure they are applied right away.

That's it! We've completed all the steps to deploy an AI-powered app. Now it's your turn to build your own app! You're very welcome to share your app in our community and let's support each other! 💖

💝 A Product Design Story

Local Stream is a simple app, but before achieving this result, Lady H. went through 3 failed versions due to issues with user experience. The key to building a successful product is not just ensuring model performance but also maintaining a good balance between smooth user experience and satisfying model performance. If either factor is lacking, users won't use your app.

At first, Lady H. intended to use Instagram to showcase local activities because of its collection of fun photos taken by ordinary people in various travel destinations. These photos are up-to-date and available for free. In an effort to find the most relevant photos on Instagram, Lady H. experimented with sentiment analysis and different ranking formulas. However, Instagram has strong security settings: once it detects bot-like activity, it prompts additional manual authentication steps. Lady H. couldn't find a way to automatically bypass these authentication requests, so she had to explore alternative solutions for image search.

Here are the examples of image search using Instagram:

It's unfortunate that Instagram photos couldn't be used. However, every cloud has a silver lining! Lady H. later discovered that the image search results provided by Apify Image Scraper and Google Custom Search are both more relevant and more specific than those from Instagram. Additionally, to achieve the desired user experience, Lady H. went through trial and error to find ways to ensure image search quality while reducing image search and loading time.

Initially, OpenAI wasn't the first choice for providing travel suggestions. Lady H. experimented with web scraping to extract recommendations from popular travel websites and also tried renowned tools like Langchain. However, after weighing factors such as output quality, user experience, and financial cost, Lady H. ultimately decided to use the OpenAI API.

Building a product offers numerous learning opportunities. Lady H. wonders if her personality belongs to a minority group that cannot stop thinking about better solutions until a satisfying one is found. How about you? Share your product development stories here!

Also, keep in mind, friends, that sometimes you need to take a good rest first. After returning to work, you may find that you have even better ideas!
